Solving the Data Bottleneck: LabV’s Six-Week Path to Real-Time Insight and Quality Control

Labs generate more data than they can easily use, and manual workflows and fragmented tools slow down the decisions that data should support. LabV, a lab data management company, set out to design a system that makes it faster to identify issues and communicate them clearly, both internally and externally.

LabV worked with Wolfram Consulting to build a digital assistant for real-time lab data analysis. A single prompt now surfaces trends that once took hours to uncover. In one case, it revealed a correlation between density and heat resistance, guiding product decisions without manual analysis or coding. This made LabV's platform one of the first AI-powered solutions for material testing labs, pairing AI's fluency with a computational foundation so every output is both fast and verifiable.

The Prerequisites for Smarter Analysis

Meeting regulatory standards requires collecting and analyzing hundreds of data points across batches and suppliers. But LabV’s instruments weren’t fully connected to its laboratory information management system (LIMS), and much of the data still lived in spreadsheets. Even routine retrieval was slow. Deeper comparisons—like tracking material behavior by supplier—were often skipped entirely.

While generative AI tools were readily available, they couldn’t access LabV’s proprietary datasets or guarantee accurate answers. Without a way to ground responses in the lab’s own data, AI risked producing results that looked plausible but couldn’t be trusted. Research shows that disorganized, siloed data delays batch approvals, extends development cycles and drives up costs by consuming engineering hours in redundant work.

More fundamentally, the fragmented system made automation impossible. Traditional LIMS platforms weren't designed for complex data integration or analysis at scale. To move forward, LabV had to build a centralized, structured dataset, one capable of supporting real-time decisions and enabling smarter tools. Without that foundation, AI remained out of reach.

Making Data Usable at Scale

LabV couldn't move forward until it fixed its data layer. Because a traditional LIMS isn't built to connect every instrument or consolidate their outputs, Wolfram helped create a unified framework where test results could be collected, structured and searched, laying the groundwork for automation and, eventually, AI-powered analysis.
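To make "collected, structured and searched" concrete, here is a minimal Python sketch of the general idea: records from two differently shaped sources (an instrument export and a spreadsheet row) are normalized into one common record type, which can then be filtered by any field. The schema, field names, source layouts and supplier names are all hypothetical illustrations, not LabV's or Wolfram's actual data model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TestResult:
    """One normalized measurement in a hypothetical unified schema."""
    batch_id: str
    supplier: str
    property_name: str
    value: float
    unit: str


def from_instrument(record: dict) -> TestResult:
    # Hypothetical instrument export whose keys map one-to-one onto the schema.
    return TestResult(
        batch_id=record["batch"],
        supplier=record["supplier"],
        property_name=record["property"],
        value=float(record["value"]),
        unit=record["unit"],
    )


def from_spreadsheet_row(row: list) -> TestResult:
    # Hypothetical spreadsheet layout: batch, supplier, property, value, unit.
    # Cells arrive as strings, so whitespace is trimmed and the value is parsed.
    batch, supplier, prop, value, unit = (cell.strip() for cell in row)
    return TestResult(batch, supplier, prop, float(value), unit)


def search(results, **criteria):
    """Return the results whose fields equal every given criterion."""
    return [
        r for r in results
        if all(getattr(r, field) == wanted for field, wanted in criteria.items())
    ]


# Illustrative records only; not real test data.
results = [
    from_instrument({"batch": "B-001", "supplier": "Supplier A",
                     "property": "density", "value": 1.21, "unit": "g/cm3"}),
    from_spreadsheet_row([" B-002", "Supplier B ", "density", "1.18", "g/cm3"]),
]
print(search(results, supplier="Supplier B"))
```

Once both sources share one record type, a query like "all density results from Supplier B" no longer depends on where each measurement originally came from.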

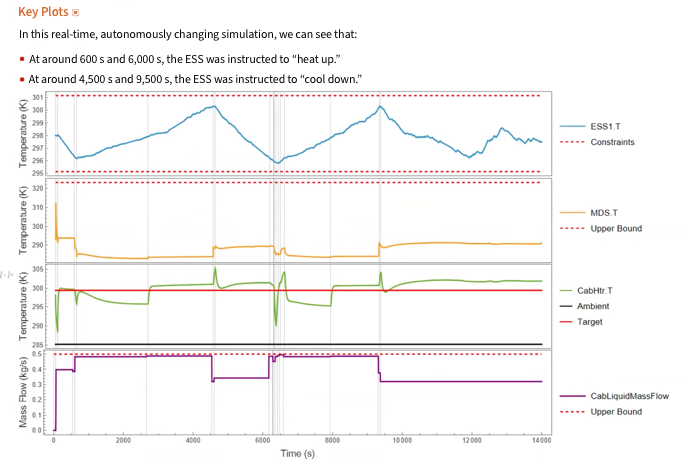

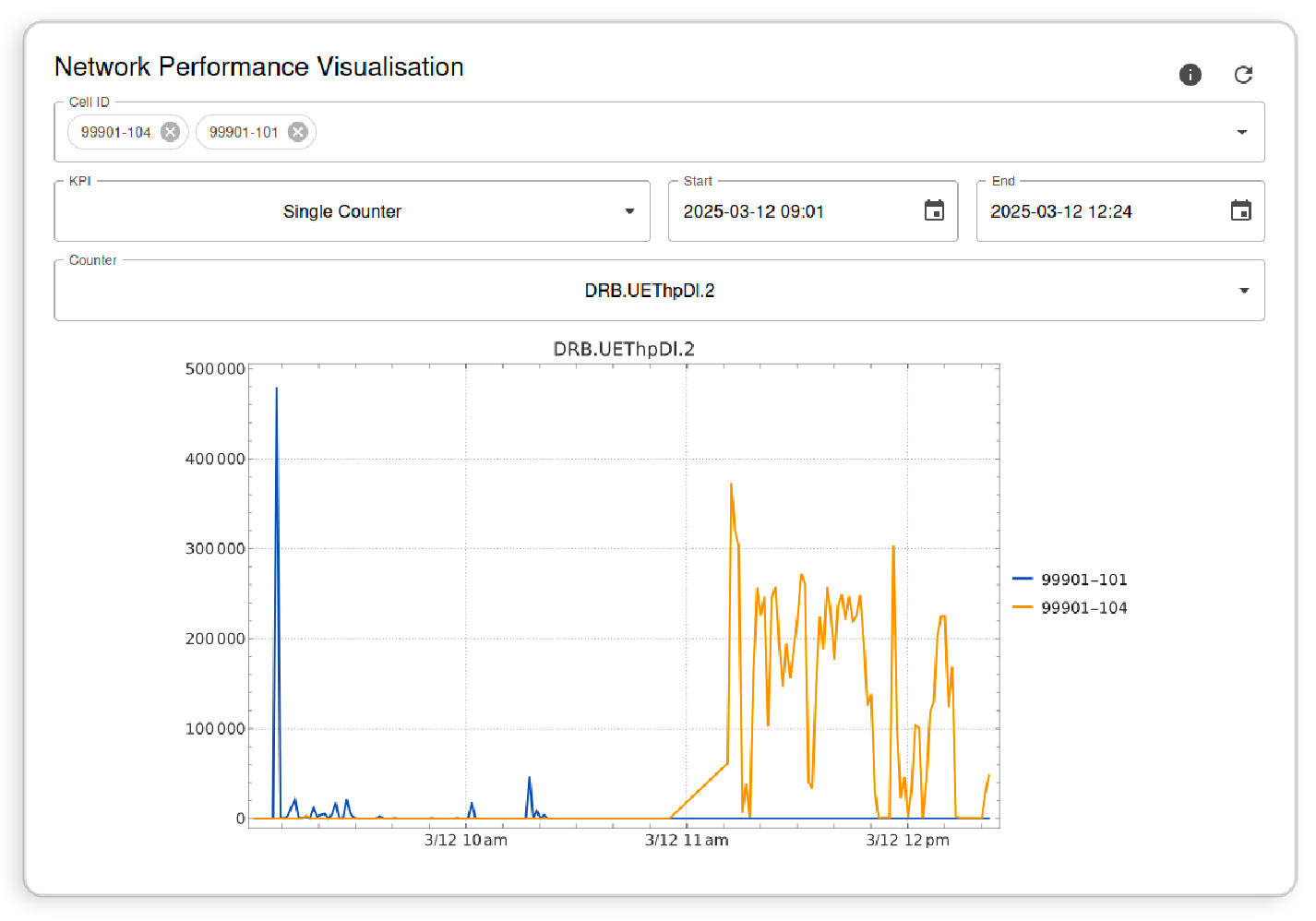

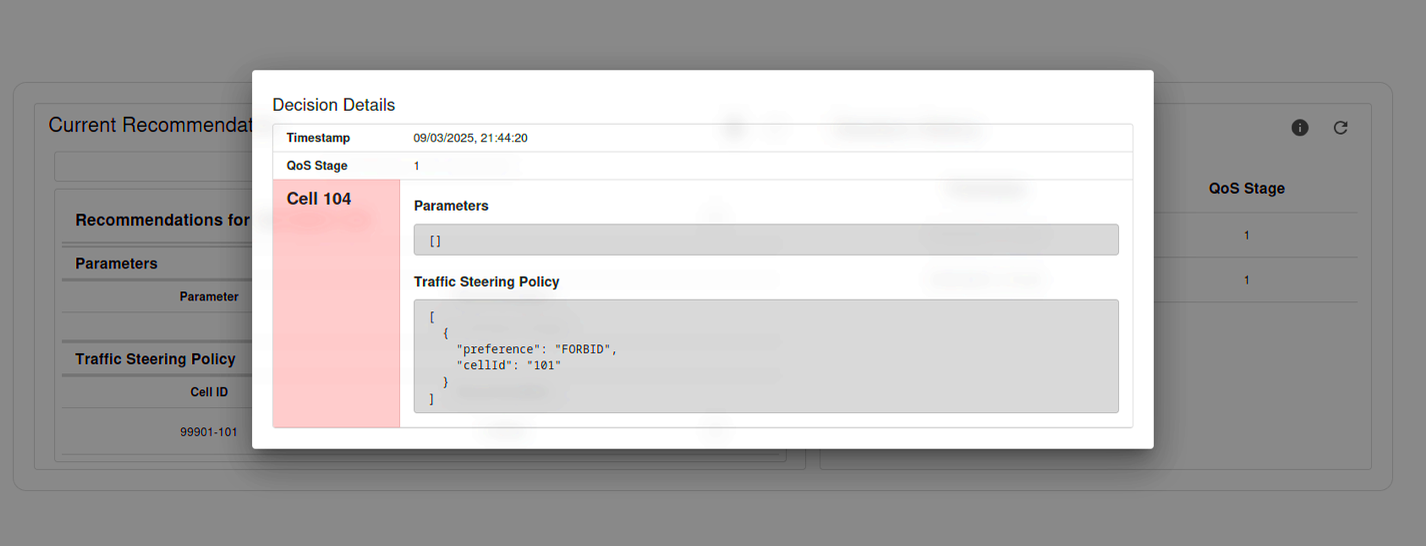

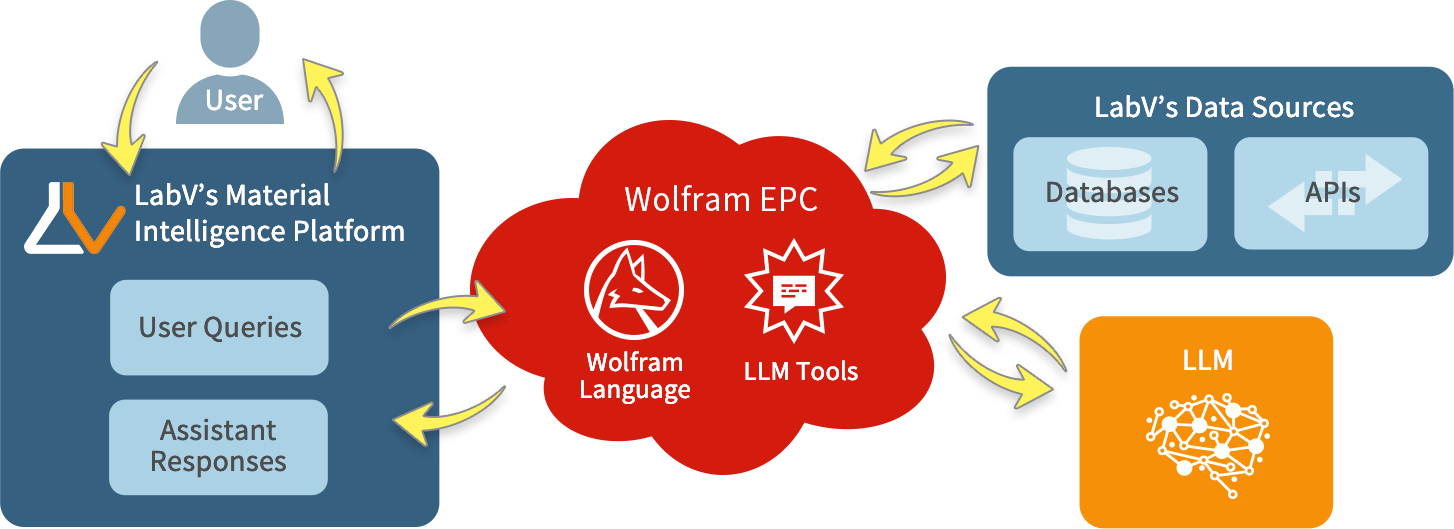

Once the data was structured, LabV and Wolfram Consulting built a digital assistant on top of it. A large language model interprets natural language queries, while Wolfram's back end performs the actual analysis, so answers are computed from the data rather than generated by the model, which guards against AI hallucinations. The assistant's chat interface connects through Wolfram Enterprise Private Cloud, which coordinates LabV's structured datasets, Wolfram's computation results and the language model output. This architecture ensures every response draws on verified data and is computed with the same algorithms used in scientific and engineering applications.
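The division of labor described above can be sketched in a few lines. In this illustrative Python stand-in (the real system runs its computation in Wolfram's back end, not in Python), the language model's only job is assumed to be turning a question into a structured request; a deterministic function then computes the answer from the stored data. The request format, the `OPERATIONS` whitelist and the batch values are hypothetical, and Pearson correlation is used simply as an example of the kind of analysis a prompt might trigger.

```python
import math

# Hypothetical structured data: one row per batch (illustrative values, not LabV data).
BATCHES = [
    {"batch": "B-001", "density": 1.10, "heat_resistance": 180.0},
    {"batch": "B-002", "density": 1.15, "heat_resistance": 188.0},
    {"batch": "B-003", "density": 1.20, "heat_resistance": 195.0},
    {"batch": "B-004", "density": 1.25, "heat_resistance": 203.0},
]


def pearson(xs, ys):
    """Pearson correlation coefficient, computed deterministically from the data."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


# The only computations the back end will run; any other request is rejected.
OPERATIONS = {"correlation": pearson}


def answer(request):
    """Execute a structured request (as a language model might emit it) against the data."""
    op = OPERATIONS.get(request["operation"])
    if op is None:
        raise ValueError(f"unsupported operation: {request['operation']}")
    xs = [row[request["x"]] for row in BATCHES]
    ys = [row[request["y"]] for row in BATCHES]
    # The number in the reply comes from this computation, never from the model's text.
    return {"operation": request["operation"], "x": request["x"], "y": request["y"],
            "value": round(op(xs, ys), 3), "n": len(xs)}


# Stand-in for the language model step: "Is density related to heat resistance?"
request = {"operation": "correlation", "x": "density", "y": "heat_resistance"}
print(answer(request))
```

The design choice worth noting is the whitelist: the model can only select among computations the back end already knows how to run, so a plausible-sounding but unsupported request fails loudly instead of producing an invented figure.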

The assistant isn’t built for show—it’s built to be used. LabV’s approach reflects best-practice machine learning workflows: data generation, preparation, model training, deployment and maintenance—keeping predictive insights accurate, explainable and current. Powered by Wolfram’s tech stack, a single prompt can return correlation tables, batch-level comparisons or supplier performance charts in seconds, using both historical and current data.

Real Results, Not Just Output

LabV replaced fragmented workflows with prompt-driven analysis powered by Wolfram’s back end. Batch issues that once went unnoticed were flagged in seconds. One manufacturer reported a 10% reduction in engineering hours by running fewer tests without sacrificing quality—time they could redirect toward faster development.

In coatings R&D, the assistant has identified optimal formulations for extreme environmental conditions by combining historical data with defined requirements, cutting development cycles and improving resource efficiency. Visual outputs improved supplier communication, and customized data handling reduced errors—helping the lab meet standards without extra head count or production delays.

Plus, the project’s six-week delivery window showed the team could move quickly without cutting corners. That speed, paired with Wolfram’s technical foundation, helped validate LabV’s approach and reinforced its credibility as a scalable platform for lab data oversight.

From Complexity to a Competitive Edge

LabV’s results were made possible by a system built to handle complexity from the start. Wolfram’s tech stack supports legacy data, real-time queries and evolving compliance needs. For leaders, that kind of scalability isn’t theoretical—it’s what makes automation sustainable under real-world demands.

Wolfram’s hybrid approach—combining a language model front end with a computational back end—delivers usable results without guesswork. Prompts return verifiable outputs, not vague summaries. Teams can surface patterns, outliers or points of interest in moments, interpret their significance and rapidly iterate through potential solutions—giving LabV clients an agility advantage in R&D and quality control.

By embedding a computational layer between the language model and the underlying data, Wolfram Consulting ensures AI output isn't just plausible: it's correct, explainable and backed by traceable sources. Because language models generate output probabilistically rather than computing it, keeping verifiable computation in the loop is what makes each result trustworthy enough to act on. That's why it's not just about adding AI; it's about making your data work for you.

When you’re ready to move beyond fragmented data, Wolfram Consulting can help you build the system that makes better decisions possible.