Radio Access Networks (RANs) form the essential bridge between mobile devices and a network’s core infrastructure. For 3G and 4G networks, mobile operators have traditionally relied on proprietary RAN systems—typically sourced from a single vendor—which can limit interoperability, raise long-term costs and slow adaptation to new technical demands.

In the shift to 5G, Wolfram has shown how AI-driven network optimisation and a deployable full-stack environment extend what Open RAN can deliver, giving developers direct access to built-in machine learning and advanced visualisation inside the network.

That vision of a more open and adaptable network underpins Wolfram’s work in the Cambridgeshire Open RAN Ecosystem (CORE) project. The initiative, one of 19 regional trials funded through the UK government’s Open Networks Ecosystem programme, served as a live, multi-vendor testbed.

Wolfram’s role centred on building and deploying a predictive optimisation rApp inside this multi-vendor network, demonstrating how machine learning can guide real-time decisions and proving that the full Wolfram stack can operate natively in an Open RAN environment.

An Open Way Forward

As 5G networks evolve to support increasingly complex demands—from real-time media streaming to dense device connectivity—open, interoperable infrastructure offers a path toward more adaptive and cost-effective deployments. Countries like the United States and Japan have already begun scaling Open RAN technologies in live environments, while adoption in Europe is still in the early stages.

Wolfram collaborated with eight partners, ranging from infrastructure providers to academic researchers, to deploy a testbed Open RAN 5G network in Cambridgeshire. Unlike prior lab demonstrations, the CORE network operated across public venues and supported hardware and software from multiple vendors, demonstrating the viability of a modular, interoperable network under real-world conditions. In field testing, Wolfram’s predictive optimisation rApp helped the network sustain download speeds above 700 Mbps with latency under 10 milliseconds, performance levels demonstrated during a live augmented-reality concert demo.

Wolfram’s role in this project centred on building an rApp within the RAN Intelligent Controller, showing how AI-driven insight can support real-time policy decisions without sacrificing explainability or control. Just as significant, the project proved that the full Wolfram technology stack can run natively inside an Open RAN environment, giving developers immediate access to advanced computation previously available only outside the network. As UK operators explore new architectures and supply-chain diversification, the CORE project stands as a concrete example of what’s possible—and what’s ready to scale.

Wolfram’s Network Optimisation rApp

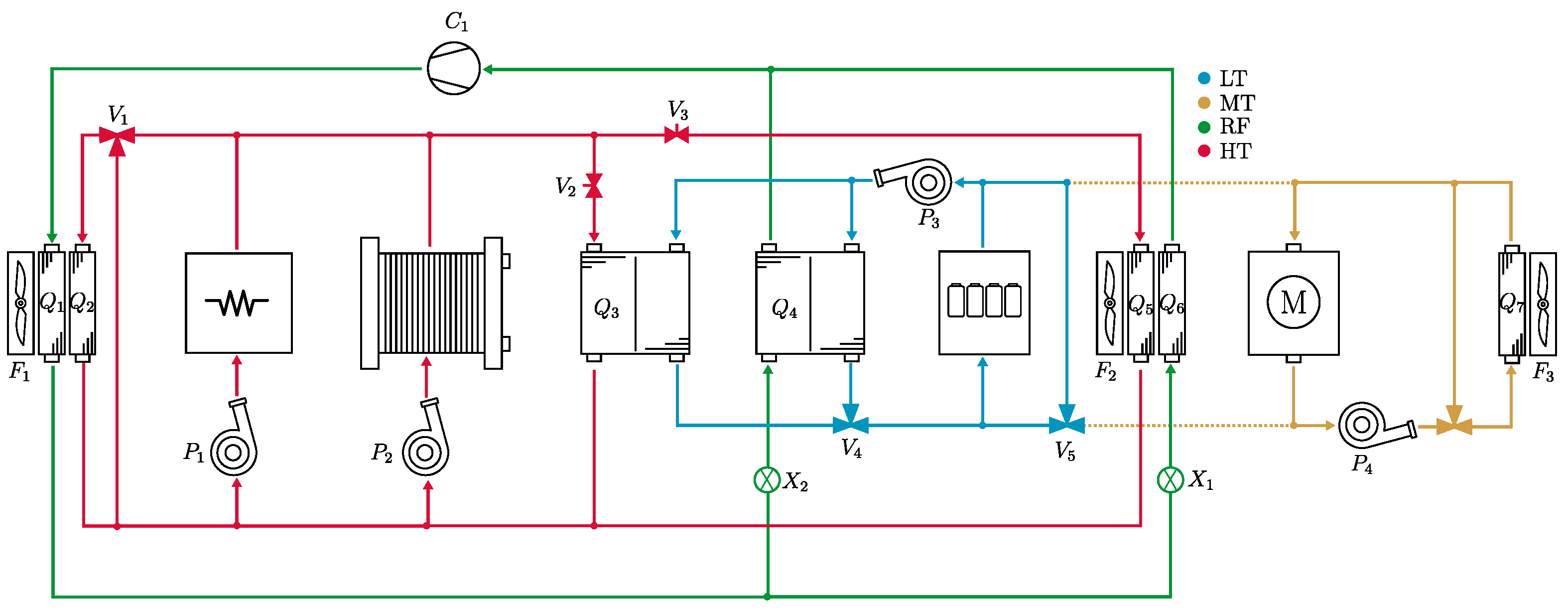

In the Open RAN architecture, rApps are standardised software applications that run in the non-real-time RAN Intelligent Controller (Non-RT RIC). Their role is to analyse historical and near-real-time network data to support intelligent traffic management, anomaly detection and policy optimisation—helping operators adapt to changing network conditions without manual intervention.

rApps are a core component of the O-RAN specification, and Wolfram developed a native Wolfram Language implementation for the CORE project. The result was a customised rApp that provided predictive analytics and explainable decision support within a multi-vendor environment. It also established a foundational Open RAN SDK to drive future data- and AI-powered app development.

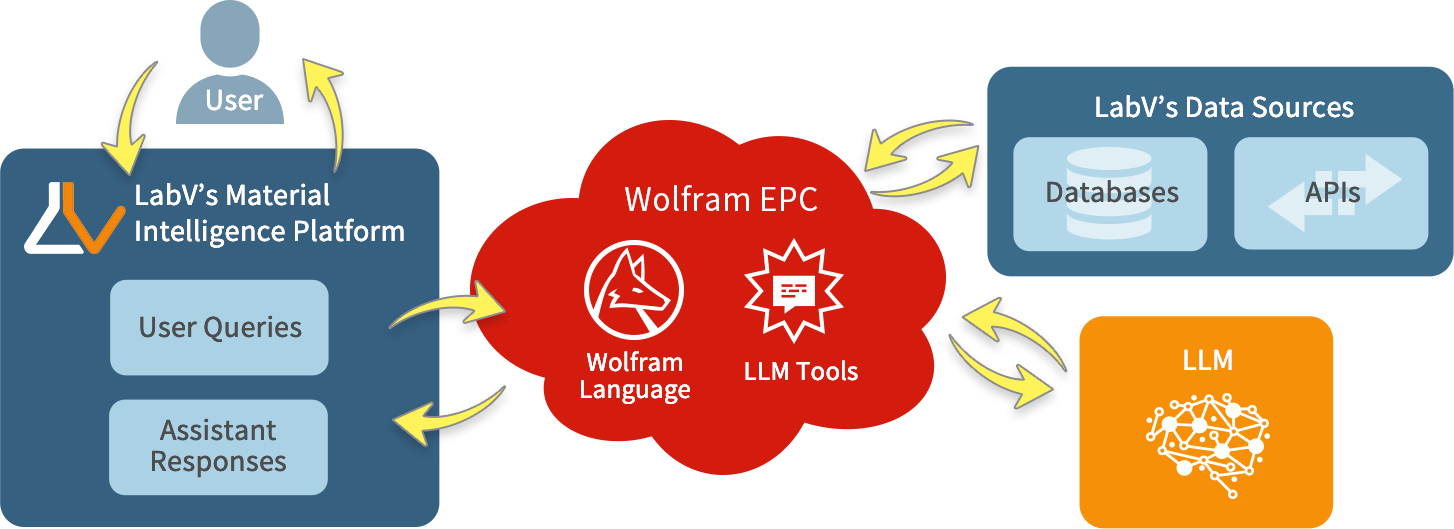

Wolfram’s Network Optimisation rApp is a full-stack implementation designed to support intelligent decision-making in Open RAN environments. Built using Wolfram Language, the system combines a back-end machine learning engine that analyses network data and predicts performance patterns with a front-end dashboard that visualises key metrics and delivers actionable recommendations to engineers.

Developed as part of the CORE project, the rApp was successfully deployed alongside partner hardware in a multi-vendor testbed. This deployment also confirmed that the entire Wolfram technology stack could operate natively within the RAN environment, giving developers direct access to advanced computation and machine learning without external integration.

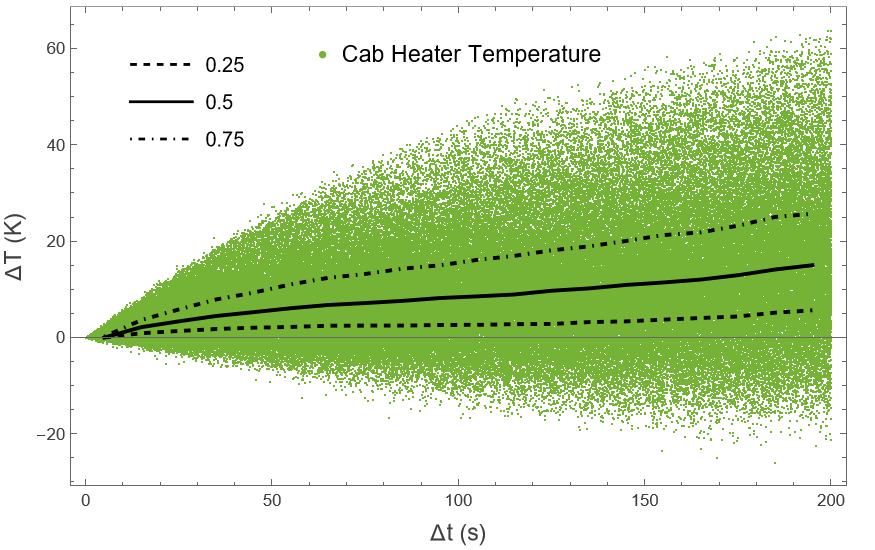

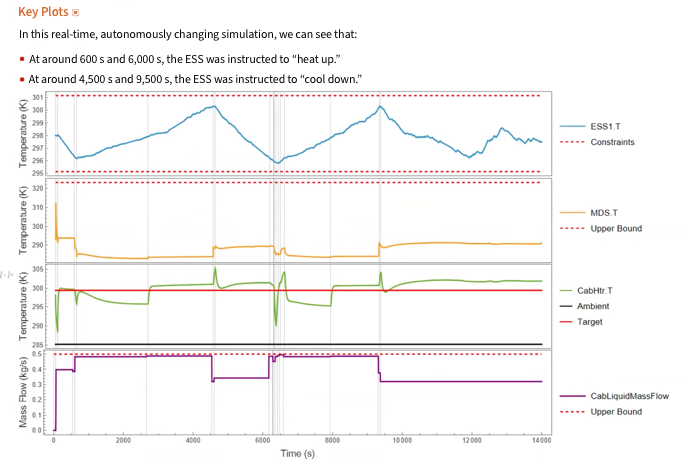

The back end of Wolfram’s rApp processes live network performance data to support intelligent optimisation. It ingests key performance indicators (KPIs) such as throughput, latency, signal strength and packet loss, the core metrics used to assess the health and efficiency of a 5G network. Using these inputs, the machine learning model identifies patterns in traffic behaviour, detects anomalies and generates recommendations aimed at improving network performance. These outputs are designed to help operators proactively manage traffic flow and resource allocation across the network. While retraining frequency depends on deployment parameters, the model is intended to operate continuously in tandem with incoming telemetry data.
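The anomaly-detection step described above can be illustrated with a minimal Python sketch. This is not Wolfram’s implementation, which is written in Wolfram Language and whose model is not detailed in this article; the sketch simply assumes a rolling z-score over a single throughput series. The function name `flag_anomalies`, the window size, the threshold and the sample values are all hypothetical.

```python
from statistics import mean, stdev


def flag_anomalies(samples, window=5, threshold=3.0):
    """Return indices of samples that deviate strongly from the preceding window.

    Assumption: a rolling z-score stands in for whatever model a real rApp uses.
    """
    anomalies = []
    for i in range(window, len(samples)):
        history = samples[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # Flat history: any change at all counts as a deviation.
            if samples[i] != mu:
                anomalies.append(i)
            continue
        if abs(samples[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


# Invented throughput readings in Mbps, with one sudden drop at index 6.
throughput_mbps = [700, 705, 698, 702, 701, 699, 350, 703, 700]
anomalous = flag_anomalies(throughput_mbps)
print(anomalous)
```

A production system would likely combine several KPIs and a trained model rather than a single-series threshold, but the control flow (ingest telemetry, score it against recent history, flag outliers) has the same shape.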

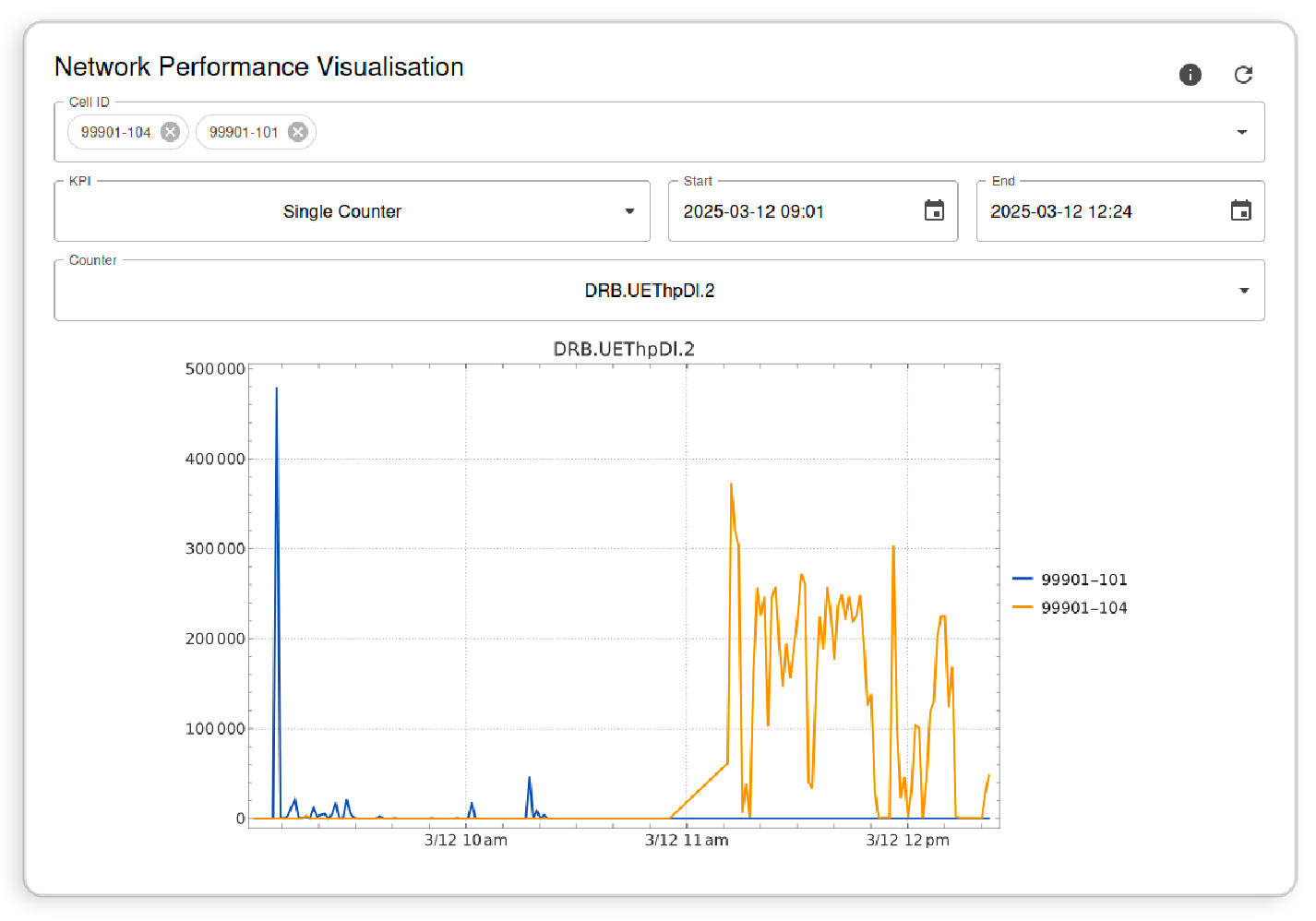

The front-end dashboard of Wolfram’s rApp provides a visual interface for monitoring network performance in real time. Engineers can view KPIs such as throughput and signal strength using standard visualisations, with options to compare multiple data streams or focus on specific time windows. Users have control over which metrics are displayed and can adjust the timeframe to analyse short-term fluctuations or longer-term trends.

One view, for example, displays throughput across two adjacent cells, offering a side-by-side comparison that helps operators quickly identify load imbalances or performance deviations. This interface supports decision-making by making underlying patterns immediately visible and aligning visualisations with actionable insights generated by the back end.
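To make the side-by-side comparison concrete, the following Python sketch computes a simple imbalance score for two cells over a chosen time window. It is an illustration under stated assumptions rather than the dashboard’s actual logic: the helper names `values_in_window` and `load_imbalance`, the `(seconds, Mbps)` data layout and the sample readings are all hypothetical.

```python
def values_in_window(series, start, end):
    """Keep the values whose timestamp t satisfies start <= t < end."""
    return [value for t, value in series if start <= t < end]


def load_imbalance(cell_a, cell_b):
    """Relative difference in mean throughput: 0.0 is balanced, 1.0 is one-sided."""
    if not cell_a or not cell_b:
        raise ValueError("both cells need at least one sample")
    mean_a = sum(cell_a) / len(cell_a)
    mean_b = sum(cell_b) / len(cell_b)
    total = mean_a + mean_b
    if total == 0:
        return 0.0
    return abs(mean_a - mean_b) / total


# Invented (timestamp in seconds, throughput in Mbps) samples for two adjacent cells.
cell_a = [(0, 600.0), (60, 620.0), (120, 580.0), (180, 300.0)]
cell_b = [(0, 200.0), (60, 180.0), (120, 220.0), (180, 310.0)]

# Restrict the comparison to the first three minutes.
a = values_in_window(cell_a, 0, 180)
b = values_in_window(cell_b, 0, 180)
imbalance = load_imbalance(a, b)
print(imbalance)
```

Narrowing or widening the window changes which samples feed the score, which mirrors how adjusting the dashboard timeframe shifts attention between short-term fluctuations and longer-term trends.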

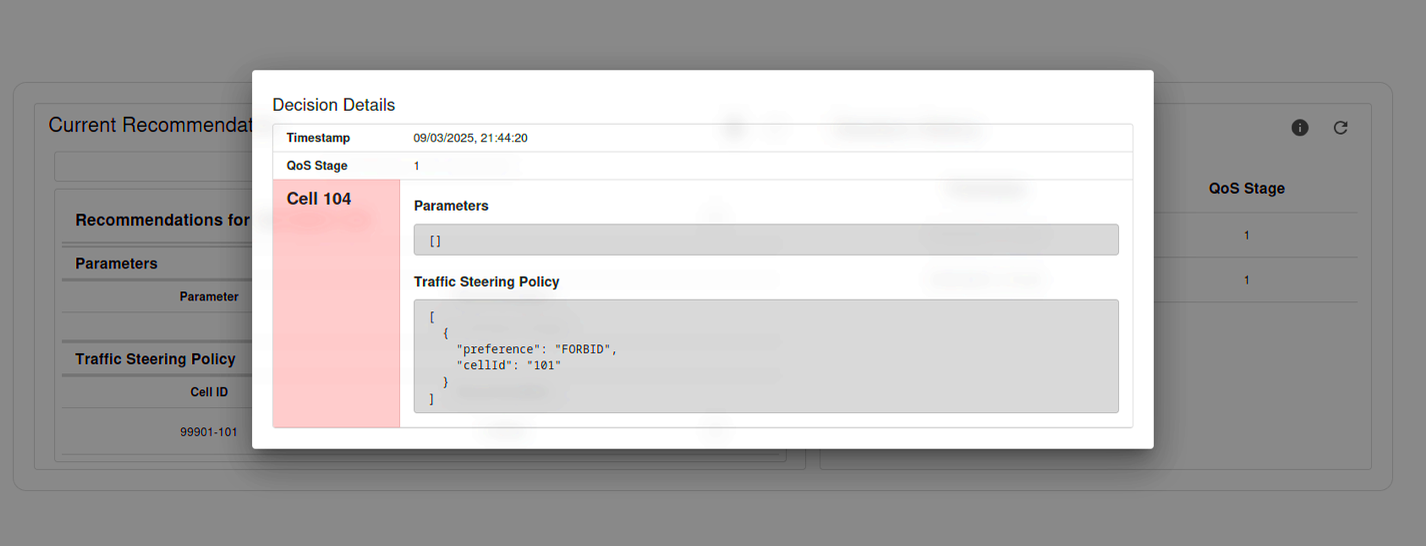

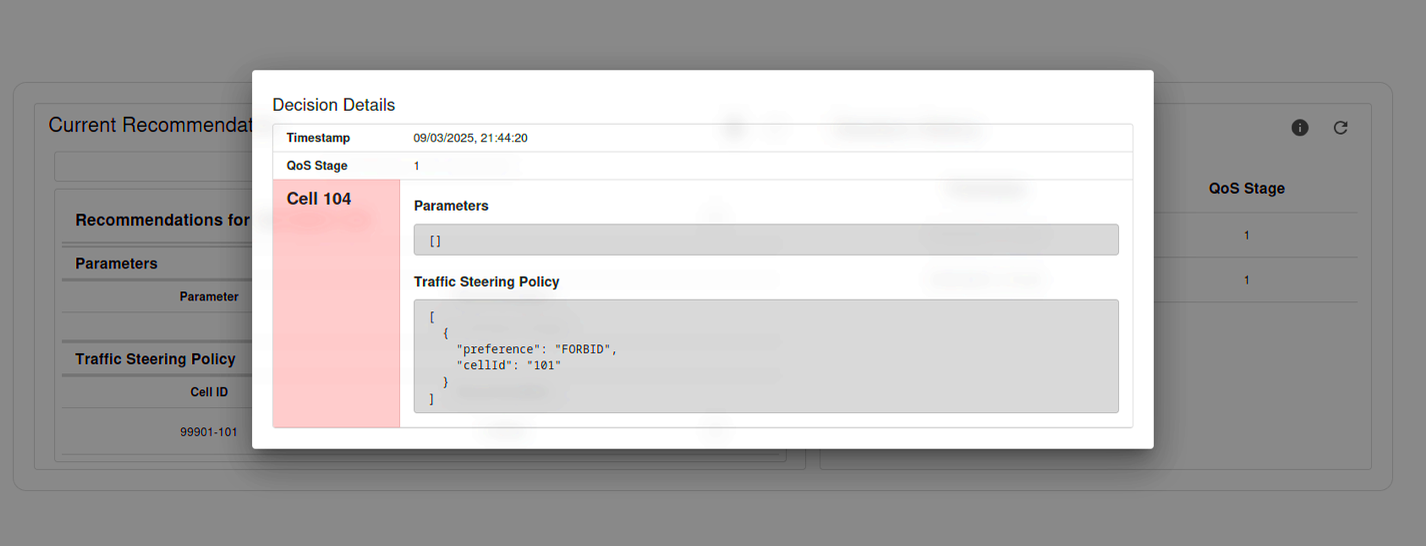

The rApp’s recommendation system translates back-end analysis into actionable suggestions for network engineers. When the model identifies a configuration that could improve performance, such as activating additional cells to handle rising traffic, it surfaces a recommendation through the dashboard interface. These suggestions are presented alongside relevant context and supporting metrics but are not applied automatically; engineers remain responsible for reviewing and approving any changes. An example recommendation might detail a traffic-steering policy for a specific cell based on predicted demand.
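The human-in-the-loop pattern described here, where a recommendation is surfaced with its context but only acted on after an engineer approves it, can be sketched in Python as follows. This is a hypothetical data model, not the rApp’s actual interface: the `Recommendation` class, its fields, the `Status` values and the `apply_if_approved` helper are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    PENDING = "pending"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class Recommendation:
    """Hypothetical record of one suggestion shown to an engineer."""
    cell_id: str
    action: str
    rationale: str
    supporting_metrics: dict = field(default_factory=dict)
    status: Status = Status.PENDING

    def review(self, approve: bool) -> None:
        """Record the engineer's decision; each recommendation is reviewed once."""
        if self.status is not Status.PENDING:
            raise ValueError("recommendation has already been reviewed")
        self.status = Status.APPROVED if approve else Status.REJECTED


def apply_if_approved(rec, apply_fn):
    """Call apply_fn only for approved recommendations; report whether it ran."""
    if rec.status is not Status.APPROVED:
        return False
    apply_fn(rec)
    return True


# Invented example values.
rec = Recommendation(
    cell_id="cell-A",
    action="activate additional cell",
    rationale="predicted demand exceeds current capacity",
    supporting_metrics={"predicted_load": 0.92},
)
applied = []
first_attempt = apply_if_approved(rec, applied.append)   # still pending, so skipped
rec.review(approve=True)
second_attempt = apply_if_approved(rec, applied.append)  # approved, so applied
print(first_attempt, second_attempt)
```

Keeping the approval state on the recommendation itself means nothing reaches the network configuration path unless an explicit review has taken place.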

“Cellular network engineers would traditionally spend hours manually analysing and optimising a network’s performance. The O-RAN framework, enhanced by Wolfram’s rApp, transforms this paradigm completely. It not only reduces intervention time from hours to mere seconds, but also enables proactive, real-time network optimisation based on actual usage patterns. This represents a fundamental shift from manual troubleshooting to intelligent, automated network management.”

—Tony Aristeidou, Lead Developer of Wolfram’s rApp

The Promise of Open 5G: Higher Speeds, Exciting Applications

Tests conducted during the CORE project trial in Cambridgeshire demonstrated that the open 5G network consistently achieved download speeds exceeding 700 Mbps with sub-10-millisecond latency and 100% uptime during live operation. These results were obtained using commercially available devices under typical deployment conditions, not lab-simulated scenarios.

Performance was well above the ITU’s recommended 5G benchmark of 100 Mbps for user-experienced data rate and outperformed trial speeds reported in similar UK Open RAN pilots, such as Three UK’s 520 Mbps deployment in Glasgow. The results indicate that Open RAN configurations can deliver production-grade connectivity in high-demand settings.

To evaluate network performance under immersive, high-bandwidth conditions, the CORE consortium organised a public augmented-reality concert trial. A live performance at the Cambridge Corn Exchange was streamed in real time to a second location, where participants wearing Meta Quest and Apple Vision Pro headsets viewed the show as spatial 3D video with synchronised audio.

The feed, captured using custom 8K 3D cameras and streamed over the open 5G network, was delivered with less than 1.5 seconds of end-to-end latency and maintained flawless audio-visual sync across multiple headsets. This setup provided a realistic stress test of the network’s capacity to handle sustained, low-latency, multi-device loads in a public setting.

The successful execution of the augmented-reality concert trial underscores the potential of Open RAN to support next-generation applications that demand both high throughput and ultra-low latency. It also validated the ability of a multi-vendor, standards-compliant architecture to deliver consistent performance under real-world load conditions.

While this trial was limited in geographic scope, the results provide a working proof of concept for scalable, interoperable network design. By adding software-defined intelligence through Wolfram’s rApp, the project showed that intelligent optimisation and real-time responsiveness can coexist—a model for broader adoption of adaptive 5G deployments across the UK.

“By investigating an O-RAN neutral host solution, the network will be able to support multiple mobile operators over a single site. This will encourage mobile network supply chain diversification—reducing costs for deploying and operating a network, and opening up business opportunities in the O-RAN ecosystem.”

—Michael Stevens, Connecting Cambridgeshire’s Strategy & Partnership Manager

Ready to Build Smarter Networks?

The CORE project proved two things: first, that Wolfram’s full technology stack can run natively inside an Open RAN environment, powering rApps, dashboards and machine learning–driven optimisation; second, that Wolfram can deliver those results while working closely with industry partners and government-led initiatives. Together, these capabilities position Wolfram Consulting as a partner ready to help operators and vendors turn network data into real-time intelligence and adaptive 5G solutions.

Contact Wolfram Consulting to put the full Wolfram tech stack to work inside Open RAN—delivering predictive optimisation and actionable insights out of the box.