We deliver solutions for the AI era—combining symbolic computation, data-driven insights and deep technical expertise

From the creators of Mathematica and Wolfram|Alpha

Beyond the Hype: Providing Computational Superpowers for Enterprise AI

Sure, it was laughable when X’s AI chatbot Grok accused NBA star Klay Thompson of a vandalism spree after users described him as “shooting bricks” during a recent game, but it was no joke when iTutorGroup paid $365,000 to job applicants rejected by its AI in a first-of-its-kind bias case. On a larger scale, multiple healthcare companies, including UnitedHealth Group, Cigna Healthcare and Humana, face class-action lawsuits alleging that their AI algorithms improperly denied hundreds of thousands of patient claims.

So, while AI—driven by large language models (LLMs)—has emerged as a groundbreaking innovation for streamlining workflows, its current limitations are becoming more apparent, including inaccurate responses and weaknesses in logical and mathematical reasoning.

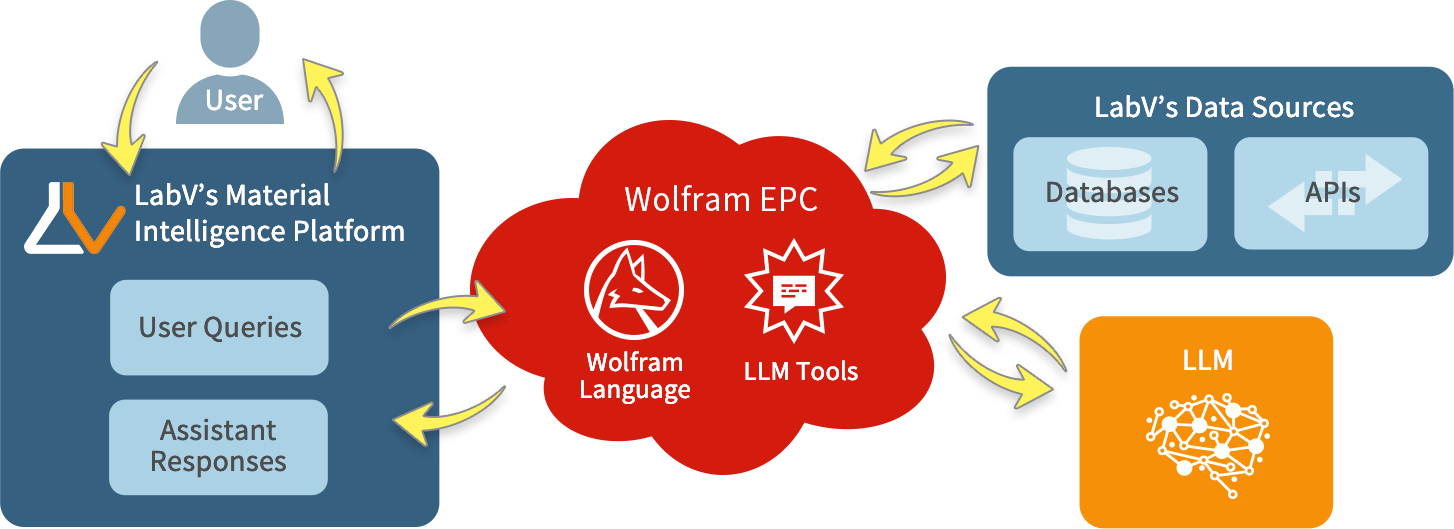

To address these challenges, Wolfram Research has developed a suite of tools and technologies to enhance the capabilities of LLMs. Wolfram’s technology stack, including the Wolfram Enterprise Private Cloud (EPC) and Wolfram|Alpha, increases the productivity of AI applications in multiple enterprise environments. By leveraging Wolfram’s extensive experience in computational intelligence and data curation, organizations can overcome LLM limitations to achieve greater accuracy and efficiency in AI-driven workflows.

At the same time, Wolfram Consulting Group is not confined to one specific LLM. Instead, we can enhance the capabilities of any sophisticated LLM that utilizes tools and writes computer code, including OpenAI’s GPT-4 (where Wolfram GPT is now available), Anthropic’s Claude 3 and Google’s Gemini Pro. We can also incorporate these tools in a privately hosted LLM within your infrastructure or via public LLM services.

Wolfram’s Integrated Technology Stack

Wolfram has a well-developed tech stack available to modern LLMs: data science tools, machine learning algorithms and visualizations. It also allows the LLM to write code to access your various data sources and store intermediate results in cloud memory, without consuming LLM context-window bandwidth. The Wolfram Language evaluation engine provides correct and deterministic results in complex computational areas where an unassisted LLM would tend to hallucinate.
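The idea of keeping intermediate results out of the context window can be sketched in a few lines. This is a minimal illustration, not Wolfram's implementation: the in-memory dictionary stands in for cloud storage, and every name here (`store_result`, `summarize`, `tool_load_measurements`, `tool_mean`) is hypothetical. The point is that a tool hands the LLM a short handle and a preview, while later tools operate on the full data by handle.

```python
import uuid

# Hypothetical in-memory stand-in for server-side (cloud) session storage.
_RESULT_STORE = {}


def store_result(value):
    """Save a large intermediate result and return a short handle for it."""
    handle = f"res-{uuid.uuid4().hex[:8]}"
    _RESULT_STORE[handle] = value
    return handle


def summarize(handle, max_items=3):
    """Return a compact description the LLM can read instead of the full data."""
    value = _RESULT_STORE[handle]
    return {"handle": handle, "length": len(value), "preview": value[:max_items]}


def tool_load_measurements(n):
    """Hypothetical tool: build n rows, keep them server-side, return a summary."""
    rows = [{"id": i, "value": i * 0.5} for i in range(n)]
    return summarize(store_result(rows))


def tool_mean(handle, field):
    """Hypothetical follow-up tool: compute on the stored rows, referenced by handle."""
    rows = _RESULT_STORE[handle]
    return sum(row[field] for row in rows) / len(rows)


# The LLM only ever sees the small summary and the final number.
summary = tool_load_measurements(10_000)
mean_value = tool_mean(summary["handle"], "value")
print(summary["length"], len(summary["preview"]), mean_value)
```

Only the summary dictionary and the computed mean would need to pass through the model, however large the stored table is.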

When your organization is equipped with the Wolfram technology stack for tool-assisted AIs, the productivity of your existing experts is enhanced with methods that support exploratory data analysis, machine learning, data science, instant reporting and more:

- The LLM can interpret expert user instructions to generate Wolfram code and tool requests performing a wide variety of computational tasks, with instant feedback and expert verification of the intermediate results.

- Custom tools for accessing corporate/proprietary structured and unstructured data, models and digital twins, and business logic feed problems to the Wolfram Language algorithms implementing your analytic workflows.

- Working sessions create a documented workflow of thought processes, prompts, tool use and code that can be reused on future problems or reviewed for audit purposes.

The platform is designed for flexible system integration: use it as a fully integrated system or as a component in an existing one. In the full-system integration, the Wolfram tech stack manages all communications between the LLM and other system components. Alternatively, use it as a set of callable tools integrated into your existing LLM stack; the modular, extensible design adapts as your needs change. You can also access the integrated Wolfram tech stack through a variety of user interfaces, including a traditional chat experience, a custom Wolfram Chat Notebook, REST APIs and other web-deployed custom user interfaces.

Wolfram Enterprise Private Cloud (EPC)

Wolfram’s EPC serves as a private, centralized hub for accessing Wolfram’s collection of LLM tools and works in commercial cloud environments such as Microsoft Azure, Amazon Web Services (AWS) and Google Cloud. For organizations preferring in-house solutions, EPC can also operate on dedicated hardware within your data center.

Once deployed, EPC can connect to various structured and unstructured data sources. These include SQL databases, graph databases, vector databases and even expansive data lakes. Applications deployed on EPC are accessible via instant web service APIs or through web-deployed user interfaces, including Chat Notebooks. As Wolfram continues to innovate, the capabilities of EPC also grow.

Wolfram|Alpha Infrastructure

Wolfram|Alpha can also be a valuable asset for your suite of tools. With a vast database of curated data across diverse realms of human knowledge, Wolfram|Alpha can augment your existing resources.

Top-tier intelligent assistants, websites, knowledge-based apps and various partners have trusted Wolfram|Alpha APIs for over a decade. These APIs have answered billions of queries across hundreds of knowledge domains. Designed for use by LLMs, Wolfram|Alpha’s public LLM-specific API endpoint is tailored to enable smooth communication and data consumption.
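As a rough sketch of what calling the LLM-specific endpoint might look like, the snippet below builds a request URL. The endpoint path and the `input` and `appid` parameter names reflect the public LLM API as commonly documented, but treat them as assumptions and check the current Wolfram|Alpha API documentation before relying on them. The helper names and the `YOUR_APP_ID` placeholder are illustrative only.

```python
from urllib.parse import urlencode
from urllib.request import urlopen

# Assumed endpoint for the Wolfram|Alpha LLM API; verify against the official docs.
LLM_API_URL = "https://www.wolframalpha.com/api/v1/llm-api"


def build_llm_api_url(query, app_id):
    """Build the GET URL for a natural-language query (no network access here)."""
    return f"{LLM_API_URL}?{urlencode({'input': query, 'appid': app_id})}"


def ask_wolfram_alpha(query, app_id, timeout=10):
    """Send the query and return the plain-text response for the LLM to read."""
    with urlopen(build_llm_api_url(query, app_id), timeout=timeout) as response:
        return response.read().decode("utf-8")


# Building the URL is safe to run offline; the placeholder AppID is not valid.
url = build_llm_api_url("10 densest elemental metals", "YOUR_APP_ID")
print(url)
```

`ask_wolfram_alpha` is defined but not called here, since it needs a real AppID and network access.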

If your LLM platform requires a customized version of Wolfram|Alpha, our sales and engineering teams will work with you to optimize your access to its extensive capabilities. This ensures that you have the right setup to harness the full potential of Wolfram|Alpha in your specific context.

Preparing Knowledge for Computation

While many platforms give an LLM access to data retrieval tools, what sets Wolfram apart is extensive experience in preparing knowledge for computation. For over a decade, Wolfram has provided knowledge curation services and custom versions of Wolfram|Alpha to diverse industries and government institutions, building sophisticated data curation workflows and exposing ontologies and schemas to AI systems. Direct access to vast amounts of data alone is not enough; an LLM requires context for data and an understanding of the user’s intent.

Wolfram consultants can establish workflows and services to equip your team with tools for programmatic data curation through an LLM. This process involves creating a list of questions and identifying the subjects or entities to which these questions apply. The LLM, with the aid of the appropriate retrieval tools, then finds the answers and cites its sources. These workflows alleviate the workload of extensive curation tasks, and the enhanced curation capabilities then operate within the EPC infrastructure.
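The question-by-entity curation loop described above can be sketched as follows. This is an illustration under stated assumptions, not Wolfram's workflow: `fake_answer_with_source` is a stub standing in for the LLM plus its retrieval tools, and the entities, answers and file names are invented sample values. The one design choice it shows is that an answer without a cited source is discarded.

```python
from dataclasses import dataclass


@dataclass
class CuratedFact:
    entity: str
    question: str
    answer: str
    source: str


def curate(entities, questions, answer_with_source):
    """Ask every question about every entity; keep only answers that cite a source."""
    facts = []
    for entity in entities:
        for question in questions:
            answer, source = answer_with_source(entity, question)
            if answer and source:
                facts.append(CuratedFact(entity, question, answer, source))
    return facts


# Invented sample data; a real system would call an LLM with retrieval tools.
_FAKE_INDEX = {
    ("Steel A", "density"): ("7.85 g/cm^3", "datasheet-a.pdf"),
    ("Steel B", "density"): ("7.90 g/cm^3", "datasheet-b.pdf"),
    ("Steel A", "supplier"): ("Acme Metals", ""),  # no source, so it is rejected
}


def fake_answer_with_source(entity, question):
    """Stub retrieval: return (answer, source), or empty strings if nothing is found."""
    return _FAKE_INDEX.get((entity, question), ("", ""))


facts = curate(["Steel A", "Steel B"], ["density", "supplier"], fake_answer_with_source)
for fact in facts:
    print(f"{fact.entity} / {fact.question}: {fact.answer} [{fact.source}]")
```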

At the same time, you’ll retain ownership of any intellectual property created for your funded project, including custom plugins or tools Wolfram develops, ensuring you have full control over the solutions created for your organization.

Enterprise AI the Wolfram Way

When you decide you need a custom LLM solution, let Wolfram Consulting Group build one tailored to your specific needs. Our work ranges from developing runtime environments that help your teams integrate Wolfram technology into existing platforms to creating application architecture, preparing data for computation, and implementing models and digital twins. That breadth of experience across computation lets Wolfram find the right balance of approaches for your problem.

By working with Wolfram, you get the best people and the best tools to keep up with developments in the rapidly changing AI landscape. The result? You will capture the full potential of the new generation of LLMs.

Contact Wolfram Consulting Group to learn more about using Wolfram’s tech stack and LLM tools to generate actionable business intelligence.

Advancing Electric Truck Simulation with Wolfram Tools: Wolfram Consulting Group

Heavy-duty electric trucks don’t have the luxury of wasted heat. Every watt must either be stored or redirected, which makes thermal control one of the hardest parts of vehicle design. For a global manufacturer of commercial freight vehicles developing its next electric platform, simulation speed became the barrier. Each test cycle ran for hours, slowing every decision about how to warm the cabin and protect the batteries.

Wolfram Consulting replaced that process with a unified computational model and a neural network able to predict and correct thermal behavior in real time. What once ran overnight now runs in seconds.

The Challenge

Designing the thermal system of a heavy-duty electric truck means balancing efficiency with durability and driver comfort. The job demands precise control of temperature across batteries, motors and cabin spaces, while keeping energy use as low as possible.

At the start of development, separate engineering groups produced their own heat data for a four-hour reference route between company facilities. The battery team modeled pack heating, the driveline group estimated motor losses, and the cabin group focused on air-handling loads. The thermal group then merged these datasets by hand in spreadsheets to approximate whole-system behavior.

Detailed GT-Suite runs that took hours were reproduced in tens of seconds in System Modeler. Closed-loop control experiments that adjust valves and pumps on the fly were the exception: the team identified a real-time simulation constraint that required them to run at 1× speed, which is why those validation runs were executed overnight.

The Solution

Wolfram Consulting delivered a two-step solution: first, a high-fidelity System Modeler framework that replaced manual thermal analysis, and second, a neural network trained on those simulations to predict and optimize real-time performance.

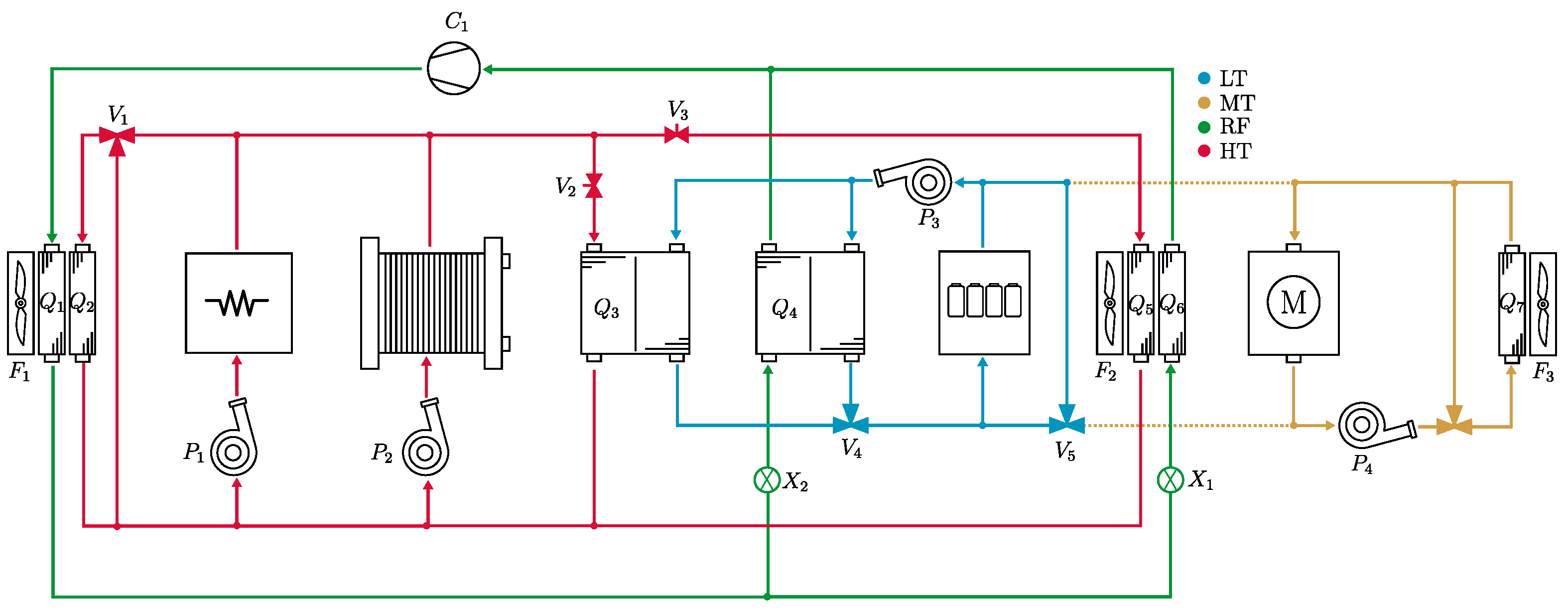

The team replaced static spreadsheets with a computable model of the truck’s thermal circuit, similar to the one shown here. Engineers built a two-tier System Modeler library for early exploration and for detailed dynamics. The first tier used simplified components with basic mass-flow inputs, so engineers could visualize and test circuit concepts before detailed data existed. The second tier added hydraulic flow and pressure behavior, including pipe diameters, pump curves and coolant properties that vary with temperature and pressure.

These models produced results consistent with GT-Suite in a fraction of the time. A full run of the same route that once took three to four hours could be completed in about 35 seconds. The library enabled rapid iteration and reuse across projects, giving the thermal group a practical foundation that matched operating conditions.
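For a sense of scale, the speedup implied by those figures is simple arithmetic. The snippet uses only the numbers quoted above (three to four hours versus about 35 seconds).

```python
SECONDS_PER_HOUR = 3600
FAST_RUN_SECONDS = 35  # approximate System Modeler run time quoted above

# Speedup factor for the low and high ends of the original run time.
speedups = {
    hours: hours * SECONDS_PER_HOUR / FAST_RUN_SECONDS
    for hours in (3, 4)
}

for hours, factor in speedups.items():
    print(f"{hours} h run: about {factor:.0f}x faster")
# 3 h run: about 309x faster
# 4 h run: about 411x faster
```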

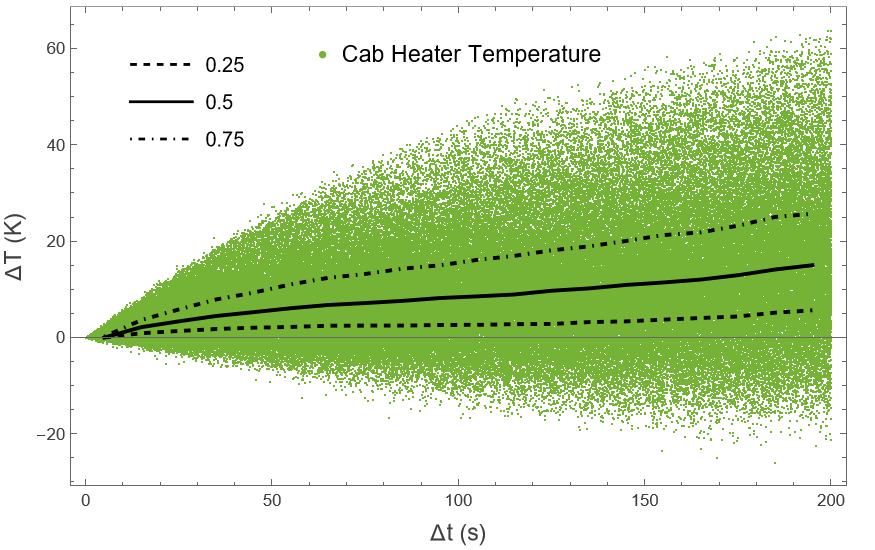

Once validated, the team generated steady-state simulations spanning valve positions and pump speeds under varied operating conditions. The resulting data trained a neural network that learned how each configuration affected the battery systems and the cabin. It could predict future temperatures and, more importantly, compute the control settings needed to hit a target.

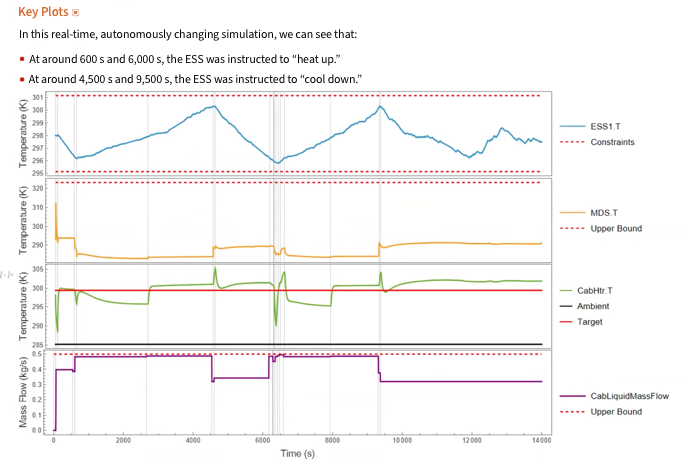

This invertible approach made the network an adaptive control layer. During a full cycle, the system adjusted parameters in real time to avoid under- or over-temperature events. When battery temperature fell below range, the controller identified the precise valve and pump changes needed to recover. It could also ease compressor load when limits were stable.
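The forward-then-inverse pattern can be illustrated with a toy example. Everything here is an assumption for illustration: the temperature formula is invented and is not the truck model, and a simple lookup table over the swept grid stands in for the trained neural network. The sketch sweeps valve and pump settings once, then answers the inverse question of which settings come closest to a target temperature.

```python
import itertools


def toy_steady_state_temp(valve, pump, ambient_c):
    """Invented steady-state battery temperature in deg C; NOT the real model."""
    return ambient_c + 30.0 * valve - 12.0 * pump * (1.0 - 0.5 * valve)


VALVES = [i / 10 for i in range(11)]  # valve opening from 0.0 to 1.0
PUMPS = [i / 10 for i in range(11)]   # normalized pump speed from 0.0 to 1.0


def build_table(ambient_c):
    """Sweep the grid once, analogous to the batch of steady-state simulations."""
    return {
        (valve, pump): toy_steady_state_temp(valve, pump, ambient_c)
        for valve, pump in itertools.product(VALVES, PUMPS)
    }


def settings_for_target(table, target_c):
    """Inverse query: the grid point whose predicted temperature is closest to target."""
    return min(table, key=lambda setting: abs(table[setting] - target_c))


table = build_table(ambient_c=-10.0)
valve, pump = settings_for_target(table, target_c=15.0)
predicted = table[(valve, pump)]
print(f"valve={valve:.1f}, pump={pump:.1f}, predicted={predicted:.2f} C")
```

A trained network would replace the table with a model that generalizes between grid points and across operating conditions; the table keeps the sketch dependency-free.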

Together, the simulation framework and control layer created a self-correcting thermal system that improved speed and accuracy.

The Results

The framework delivered immediate gains. Engineers moved from multi-hour runs to seconds-scale iteration, testing new circuit layouts and control strategies that had been impossible to study before.

The adaptive control layer turned static calibration into a data-driven process. Instead of tuning modes by hand, the system maintained target ranges automatically during validation, adjusting pump speeds and valve positions to keep all components within their limits.

The libraries are now used beyond the initial program. The battery group is modeling internal heat generation, and the controls team has integrated the thermal model through FMI for co-simulation. Working from the same computable foundation, teams report faster decisions and more consistent results.

Building a Scalable Modeling Standard

By replacing a slow, siloed workflow with a unified computational framework, Wolfram Consulting turned thermal modeling from a bottleneck into a continuous design process. What began as a single EV program has become a shared modeling standard across engineering groups and now extends to electrical and control work.

When you need to speed up simulations or optimize system performance, Wolfram Consulting can help you build models that deliver faster, more reliable results.

Solving the Data Bottleneck: LabV’s Six-Week Path to Real-Time Insight and Quality Control

Labs generate more data than they can easily use. The result? Manual workflows and fragmented tools slow down decisions. LabV, a lab data management company, decided to design a system that makes it faster to identify issues and communicate them clearly—both internally and externally.

LabV worked with Wolfram Consulting to build a digital assistant for real-time lab data analysis. A single prompt now surfaces trends that once took hours to uncover. In one case, it revealed a correlation between density and heat resistance—guiding product decisions without manual analysis or coding. This made LabV one of the first AI-powered solutions for material testing labs, combining AI’s fluency with a computational foundation so every output is both fast and verifiable.

The Prerequisites for Smarter Analysis

Meeting regulatory standards requires collecting and analyzing hundreds of data points across batches and suppliers. But LabV’s instruments weren’t fully connected to its laboratory information management system (LIMS), and much of the data still lived in spreadsheets. Even routine retrieval was slow. Deeper comparisons—like tracking material behavior by supplier—were often skipped entirely.

While generative AI tools were readily available, they couldn’t access LabV’s proprietary datasets or guarantee accurate answers. Without a way to ground responses in the lab’s own data, AI risked producing results that looked plausible but couldn’t be trusted. Research shows that disorganized, siloed data delays batch approvals, extends development cycles and drives up costs by consuming engineering hours in redundant work.

More fundamentally, the fragmented system made automation impossible. Traditional LIMS platforms weren’t designed for complex data integration or analysis at scale. To move forward, LabV had to build a centralized, structured dataset—one capable of supporting real-time decisions and enabling smarter tools. Without that foundation, AI was just out of reach.

Making Data Usable at Scale

LabV couldn’t move forward until it fixed its data layer. Traditional LIMS platforms aren’t built to connect every instrument or consolidate outputs. Wolfram helped create a unified framework where test results could be collected, structured and searched, laying the groundwork for automation and eventual AI-powered analysis.

Once the data was structured, LabV and Wolfram Consulting built a digital assistant to work on top of it. The interface relies on a large language model to process natural language queries, while Wolfram’s back end handles the actual analysis—ensuring accuracy and preventing AI hallucinations. The assistant’s chat interface connects through Wolfram Enterprise Private Cloud, which coordinates LabV’s structured datasets, Wolfram’s computation results and the language model output. This architecture ensures every response comes from verified data and is computed with the same algorithms used in scientific and engineering applications.
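The split between a language front end and a computational back end can be sketched like this. It is a simplified illustration, not LabV's or Wolfram's code: `parse_request` is a keyword-matching stub standing in for the language model, the batch records are invented, and a hand-written Pearson correlation stands in for the back-end analysis. What it shows is that the number in the answer comes from a deterministic computation over stored data, never from generated text.

```python
import math

# Invented batch records for illustration only; not real lab data.
BATCHES = [
    {"batch": "B1", "density": 1.0, "heat_resistance": 200.0},
    {"batch": "B2", "density": 1.1, "heat_resistance": 212.0},
    {"batch": "B3", "density": 1.2, "heat_resistance": 219.0},
    {"batch": "B4", "density": 1.3, "heat_resistance": 231.0},
]


def pearson(xs, ys):
    """Pearson correlation coefficient, computed deterministically."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)


def parse_request(prompt):
    """Stub for the language model: map a prompt to a structured request."""
    known_fields = ("density", "heat_resistance")
    text = prompt.lower()
    fields = [f for f in known_fields if f.replace("_", " ") in text]
    return {"op": "correlation", "fields": fields}


def answer(prompt):
    """Route the structured request to the computation and report what was used."""
    request = parse_request(prompt)
    if request["op"] != "correlation" or len(request["fields"]) != 2:
        raise ValueError("unsupported request")
    x_field, y_field = request["fields"]
    value = pearson([b[x_field] for b in BATCHES], [b[y_field] for b in BATCHES])
    return {"request": request, "value": value, "rows_used": len(BATCHES)}


result = answer("How does density relate to heat resistance?")
print(result)
```

Returning the structured request and row count alongside the value is one way to make each response traceable back to the data it was computed from.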

The assistant isn’t built for show—it’s built to be used. LabV’s approach reflects best-practice machine learning workflows: data generation, preparation, model training, deployment and maintenance—keeping predictive insights accurate, explainable and current. Powered by Wolfram’s tech stack, a single prompt can return correlation tables, batch-level comparisons or supplier performance charts in seconds, using both historical and current data.

Real Results, Not Just Output

LabV replaced fragmented workflows with prompt-driven analysis powered by Wolfram’s back end. Batch issues that once went unnoticed were flagged in seconds. One manufacturer reported a 10% reduction in engineering hours by running fewer tests without sacrificing quality—time they could redirect toward faster development.

In coatings R&D, the assistant has identified optimal formulations for extreme environmental conditions by combining historical data with defined requirements, cutting development cycles and improving resource efficiency. Visual outputs improved supplier communication, and customized data handling reduced errors—helping the lab meet standards without extra head count or production delays.

Plus, the project’s six-week delivery window showed the team could move quickly without cutting corners. That speed, paired with Wolfram’s technical foundation, helped validate LabV’s approach and reinforced its credibility as a scalable platform for lab data oversight.

From Complexity to a Competitive Edge

LabV’s results were made possible by a system built to handle complexity from the start. Wolfram’s tech stack supports legacy data, real-time queries and evolving compliance needs. For leaders, that kind of scalability isn’t theoretical—it’s what makes automation sustainable under real-world demands.

Wolfram’s hybrid approach—combining a language model front end with a computational back end—delivers usable results without guesswork. Prompts return verifiable outputs, not vague summaries. Teams can surface patterns, outliers or points of interest in moments, interpret their significance and rapidly iterate through potential solutions—giving LabV clients an agility advantage in R&D and quality control.

By embedding a computational layer between the language model and the underlying data, Wolfram Consulting ensures AI output isn’t just plausible—it’s correct, explainable and backed by traceable sources. Since machine learning models return probabilities rather than certainties, having verifiable computation in the loop means every result can be trusted and acted upon. That’s why it’s not just about adding AI—it’s about making your data work for you.

When you’re ready to move beyond fragmented data, Wolfram Consulting can help you build the system that makes better decisions possible.

The CORE Project: Bringing Open 5G to Cambridgeshire, UK, and Beyond

Radio Access Networks (RANs) form the essential bridge between mobile devices and a network’s core infrastructure. For 3G and 4G networks, mobile operators have traditionally relied on proprietary RAN systems—typically sourced from a single vendor—which can limit interoperability, raise long-term costs and slow adaptation to new technical demands.

In the shift to 5G, Wolfram has shown how AI-driven network optimisation and a deployable full-stack environment extend what Open RAN can deliver, giving developers direct access to built-in machine learning and advanced visualisation inside the network.

That vision of a more open and adaptable network underpins Wolfram’s work in the Cambridgeshire Open RAN Ecosystem (CORE) project. The initiative—one of 19 regional trials funded through the UK government’s Open Networks Ecosystem program—served as a live, multi-vendor testbed.

Wolfram’s role centred on building and deploying a predictive optimisation rApp inside this multi-vendor network, demonstrating how machine learning can guide real-time decisions and proving that the full Wolfram stack can operate natively in an Open RAN environment.

An Open Way Forward

As 5G networks evolve to support increasingly complex demands—from real-time media streaming to dense device connectivity—open, interoperable infrastructure offers a path toward more adaptive and cost-effective deployments. Countries like the United States and Japan have already begun scaling Open RAN technologies in live environments, while adoption in Europe is still in the early stages.

Wolfram collaborated with eight partners ranging from infrastructure providers to academic researchers to deploy a testbed Open RAN 5G network in Cambridgeshire. Unlike prior lab demonstrations, the CORE network operated across public venues and supported hardware and software from multiple vendors, demonstrating the viability of a modular, interoperable network at scale. In field testing, Wolfram’s predictive optimisation rApp helped the network sustain download speeds of 700 Mbps with latency under 10 milliseconds—performance levels proven during a live augmented-reality concert demo.

Wolfram’s role in this project centred on building an rApp within the RAN Intelligent Controller, showing how AI-driven insight can support real-time policy decisions without sacrificing explainability or control. Just as significant, the project proved that the full Wolfram technology stack can run natively inside an Open RAN environment, giving developers immediate access to advanced computation previously available only outside the network. As UK operators explore new architectures and supply-chain diversification, the CORE project stands as a concrete example of what’s possible—and what’s ready to scale.

Wolfram’s Network Optimisation rApp

In the Open RAN architecture, rApps are standardised software applications that run in the non-real-time RAN Intelligent Controller (Non-RT RIC). Their role is to analyse historical and near-real-time network data to support intelligent traffic management, anomaly detection and policy optimisation—helping operators adapt to changing network conditions without manual intervention.

rApps are a core component of the O-RAN specification, and Wolfram developed a native Wolfram Language implementation for the CORE project. The result was a customised rApp that provided predictive analytics and explainable decision support within a multi-vendor environment. It also established a foundational Open RAN SDK to drive future data and AI-powered app development.

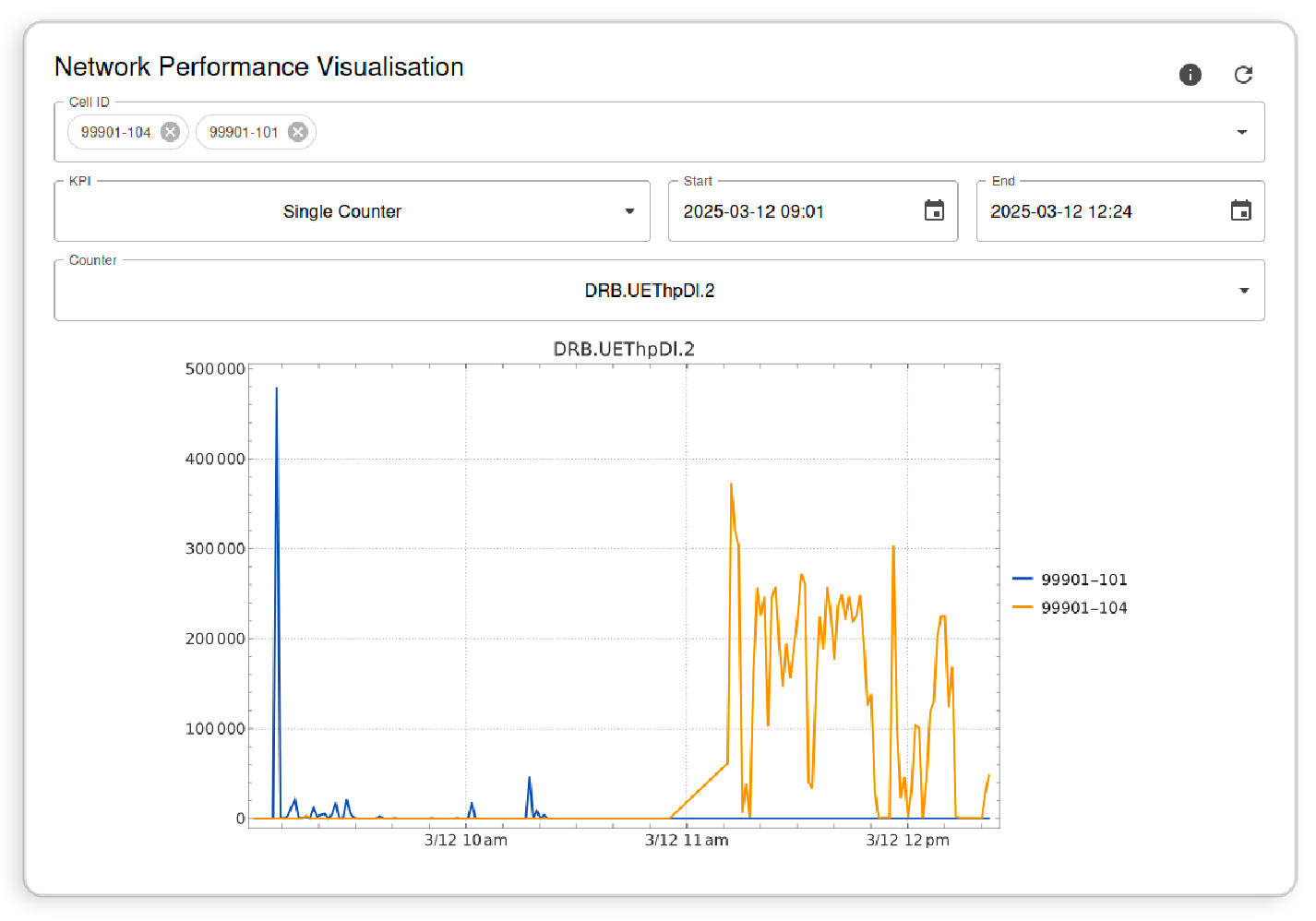

Wolfram’s Network Optimisation rApp is a full-stack implementation designed to support intelligent decision-making in Open RAN environments. Built using Wolfram Language, the system combines a back-end machine learning engine that analyses network data and predicts performance patterns with a front-end dashboard that visualises key metrics and delivers actionable recommendations to engineers.

Developed as part of the CORE project, the rApp was successfully deployed alongside partner hardware in a multi-vendor testbed. This deployment also confirmed that the entire Wolfram technology stack could operate natively within the RAN environment, giving developers direct access to advanced computation and machine learning without external integration.

The back end of Wolfram’s rApp processes live network performance data to support intelligent optimisation. It ingests key performance indicators (KPIs) such as throughput, latency, signal strength and packet loss—core metrics used to assess the health and efficiency of a 5G network. Using these inputs, the machine learning model identifies patterns in traffic behavior, detects anomalies and generates recommendations aimed at improving network performance. These outputs are designed to help operators proactively manage traffic flow and resource allocation across the network. While retraining frequency depends on deployment parameters, the model is intended to operate continuously in tandem with incoming telemetry data.
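To make the anomaly-detection step concrete, here is a deliberately simple stand-in: a trailing-window z-score check on a throughput series. The source does not say which method the rApp's model uses, so this is an assumed technique chosen for clarity, and the throughput values are invented.

```python
from statistics import mean, stdev


def rolling_anomalies(samples, window=5, threshold=3.0):
    """Flag indices that deviate from the trailing window by more than threshold sigmas."""
    flagged = []
    for i in range(window, len(samples)):
        history = samples[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma > 0 and abs(samples[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


# Invented throughput telemetry in Mbps, with one sudden drop at index 7.
throughput_mbps = [702, 698, 705, 701, 699, 703, 700, 310, 704, 697]
anomalies = rolling_anomalies(throughput_mbps)
print(anomalies)  # [7]
```

Note that the outlier inflates the spread of the windows that follow it, which is one reason production systems tend to prefer more robust statistics or learned models.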

The front-end dashboard of Wolfram’s rApp provides a visual interface for monitoring network performance in real time. Engineers can view KPIs such as throughput and signal strength using standard visualisations, with options to compare multiple data streams or focus on specific time windows. Users have control over which metrics are displayed and can adjust the timeframe to analyse short-term fluctuations or longer-term trends.

One view, for example, displays throughput across two adjacent cells, offering a side-by-side comparison that helps operators quickly identify load imbalances or performance deviations. This interface supports decision-making by making underlying patterns immediately visible and aligning visualisations with actionable insights generated by the back end.

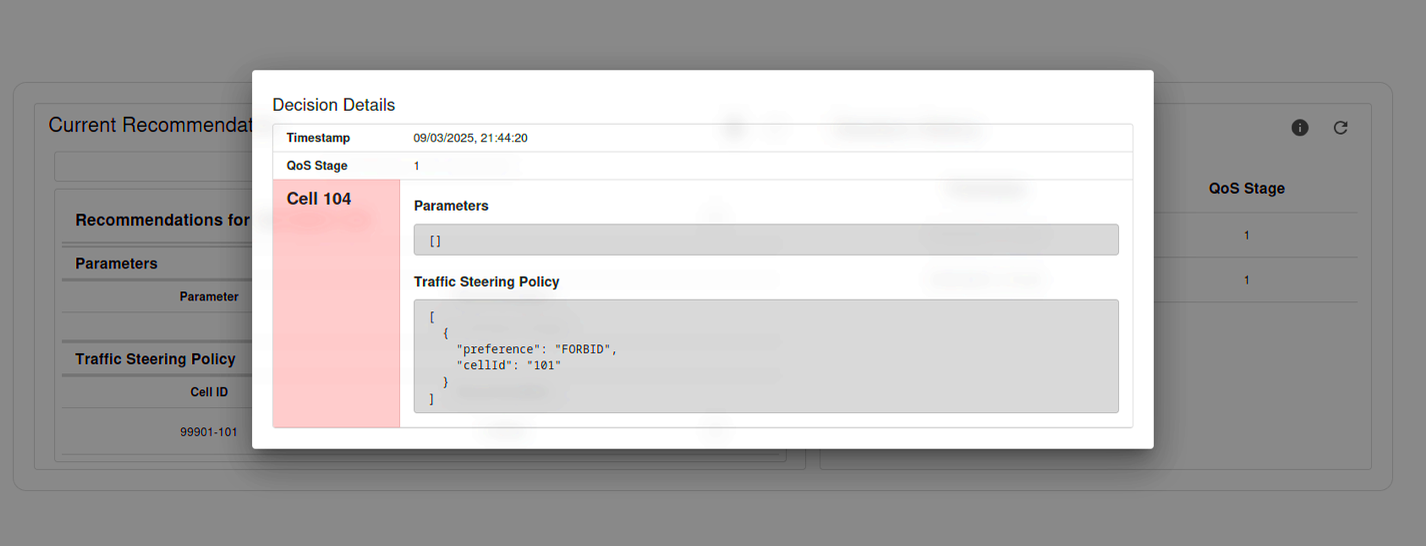

The rApp’s recommendation system translates back-end analysis into actionable suggestions for network engineers. When the model identifies a configuration that could improve performance—such as activating additional cells to handle rising traffic—it surfaces a recommendation through the dashboard interface. These suggestions are presented alongside relevant context and supporting metrics but are not applied automatically; engineers remain responsible for reviewing and approving any changes. Here is an example recommendation detailing a traffic-steering policy for a specific cell based on predicted demand.
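The review-before-apply behavior can be sketched as a small data model. The class, function names, 80% headroom rule and load figures below are all hypothetical; they only illustrate that a recommendation carries its supporting context and stays inert until an engineer approves it.

```python
from dataclasses import dataclass


@dataclass
class Recommendation:
    cell_id: str
    action: str
    reason: str
    approved: bool = False  # engineers must flip this explicitly


def recommend(predicted_load, capacity, headroom=0.8):
    """Suggest extra capacity where predicted load exceeds the headroom fraction."""
    recs = []
    for cell_id, load in predicted_load.items():
        limit = headroom * capacity[cell_id]
        if load > limit:
            reason = (
                f"predicted load {load:.0f} Mbps exceeds "
                f"{headroom:.0%} of capacity {capacity[cell_id]:.0f} Mbps"
            )
            recs.append(Recommendation(cell_id, "activate_additional_cell", reason))
    return recs


def apply_approved(recs):
    """Return the cells whose recommendations were approved; others are left alone."""
    return [rec.cell_id for rec in recs if rec.approved]


# Invented predictions and capacities in Mbps.
predicted = {"cell-1": 650.0, "cell-2": 300.0}
capacity = {"cell-1": 700.0, "cell-2": 700.0}

recs = recommend(predicted, capacity)
pending = apply_approved(recs)   # nothing is applied before review
recs[0].approved = True          # an engineer signs off
applied = apply_approved(recs)
print(recs[0].reason, pending, applied)
```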

“Cellular network engineers would traditionally spend hours manually analysing and optimising a network’s performance. The O-RAN framework, enhanced by Wolfram’s rApp, transforms this paradigm completely. It not only reduces intervention time from hours to mere seconds, but also enables proactive, real-time network optimisation based on actual usage patterns. This represents a fundamental shift from manual troubleshooting to intelligent, automated network management.”

—Tony Aristeidou, Lead Developer of Wolfram’s rApp

The Promise of Open 5G: Higher Speeds, Exciting Applications

Tests conducted during the CORE project trial in Cambridgeshire demonstrated that the open 5G network consistently achieved download speeds exceeding 700 Mbps with sub-10-millisecond latency and 100% uptime during live operation. These results were obtained using commercially available devices under typical deployment conditions, not lab-simulated scenarios.

Performance was well above the ITU’s recommended 5G benchmark of 100 Mbps for user-experienced throughput and outperformed trial speeds reported in similar UK Open RAN pilots, such as Three UK’s 520 Mbps deployment in Glasgow—confirming that Open RAN configurations can deliver production-grade connectivity in high-demand settings.

To evaluate network performance under immersive, high-bandwidth conditions, the CORE consortium organised a public augmented-reality concert trial. A live performance at the Cambridge Corn Exchange was streamed in real time to a second location, where participants wearing Meta Quest and Apple Vision Pro headsets viewed the show as spatial 3D video with synchronised audio.

The feed—captured using custom 8K 3D cameras and streamed over the open 5G network—was delivered with less than 1.5 seconds of latency and maintained flawless audio-visual sync across multiple headsets. This setup provided a realistic stress test of the network’s capacity to handle sustained, low-latency, multi-device loads in a public setting.

The successful execution of the augmented-reality concert trial underscores the potential of Open RAN to support next-generation applications that demand both high throughput and ultra-low latency. It also validated the ability of a multi-vendor, standards-compliant architecture to deliver consistent performance under real-world load conditions.

While this trial was limited in geographic scope, the results provide a working proof of concept for scalable, interoperable network design. By adding software-defined intelligence through Wolfram’s rApp, the project showed that intelligent optimisation and real-time responsiveness can coexist—a model for broader adoption of adaptive 5G deployments across the UK.

“By investigating an O-RAN neutral host solution, the network will be able to support multiple mobile operators over a single site. This will encourage mobile network supply chain diversification—reducing costs for deploying and operating a network, and opening up business opportunities in the O-RAN ecosystem.”

— Michael Stevens, Connecting Cambridgeshire’s Strategy & Partnership Manager

Ready to Build Smarter Networks?

The CORE Project proved two things: that Wolfram’s full technology stack can run natively inside an Open RAN environment—powering rApps, dashboards and machine learning–driven optimisation—and that Wolfram can deliver those results while working closely with industry partners and government-led initiatives. Together, these capabilities position Wolfram Consulting as a partner ready to help operators and vendors turn network data into real-time intelligence and adaptive 5G solutions.

Contact Wolfram Consulting to put the full Wolfram tech stack to work inside Open RAN—delivering predictive optimisation and actionable insights out of the box.

Implementing Augmented Engineering with Hybrid AI: A Collaboration between Capgemini and Wolfram

Capgemini Engineering and Wolfram Research are developing a co-scientist framework: a tool designed to support engineers working on complex physical systems. Drawing on shared strengths in symbolic computation, generative AI and systems engineering, the co-scientist helps translate engineering intent into executable, verifiable computations.

This project is part of Capgemini’s broader Augmented Engineering strategy, an initiative that applies hybrid AI techniques to real-world engineering challenges. By combining Wolfram’s expertise in symbolic computation with generative AI, the co-scientist helps teams engage with complex problems earlier in the design process—making it easier to refine assumptions and address critical risks before they compound.

Natural Language In, Symbolic Logic Out

The co-scientist is designed to bridge natural language input and computational output. By combining large language models with Wolfram’s symbolic computation and curated knowledgebase, it allows engineers to ask domain-specific questions in plain language and receive results in the form of executable code, equations or dynamic models that can be verified and reused.

Using the co-scientist feels less like querying a search engine and more like working with a technically fluent collaborator. Engineers can describe the problem in their own words, such as “simulate thermal behavior under load” or “optimize actuator response time,” and receive results they can validate immediately. Behind the scenes, the co-scientist generates Wolfram Language code and combines it with existing models and computable data to return outputs that are not just plausible but logically structured and derived from verifiable computation.

Building Trust with Hybrid AI

This collaboration puts hybrid AI to practical use by combining the flexibility of language models with the structure of symbolic reasoning and computational modeling. Instead of generating surface-level responses, the system can explain its logic and return results that hold up in real-world settings like aerospace or industrial automation.

In regulated or high-risk environments, outputs must be traceable and reproducible. Hybrid AI systems built on symbolic foundations allow teams to audit every step: how an equation was derived, what assumptions were embedded and how the result fits within engineering constraints. That’s not just helpful—it’s required in science and engineering.

From Acceleration to Transformation

The co-scientist doesn’t just accelerate existing workflows—it shifts how engineers and researchers approach complex challenges. Instead of translating a question into technical requirements and then into code, users can work at the level of intent, refining the problem itself as they explore possible solutions. That creates space for earlier insights, faster course corrections and a more iterative, computationally grounded design process.

As this collaboration continues, Wolfram’s computational framework keeps the co-scientist anchored in formal logic and verifiable output—qualities that matter in domains where failure isn’t an option. From engineering design to sustainability analysis, the co-scientist is already showing how generative AI can shift from surface-level response to real computational utility.

Turning a Research Challenge into a Computational Solution

Cancer researchers aren’t short on data, but they are often overwhelmed by it. The Cancer Genome Atlas (TCGA) contains more than 2.5 petabytes of genomic, clinical and imaging data across 33 cancer types. For those in the medical and research communities, especially those without formal computational training, making practical use of that data remains a significant challenge.

As a result, a valuable resource remains underutilized.

Dr. Jane Shen-Gunther, a gynecologic oncologist and researcher with expertise in computational genomics, encountered these limitations firsthand. She recognized the pressing need for a more accessible, unified way to retrieve and analyze genomic and imaging data from multiple sources.

“The TCGA, The Cancer Imaging Archive (TCIA) and the Genomic Data Commons (GDC) are essentially three goldmines of cancer data,” she said. “However, the data have been underutilized by researchers due to data access barriers. I wanted to break down this barrier.”

Shen-Gunther partnered with Wolfram Consulting Group to design the TCGADataTool, a Wolfram Language–based interface that simplifies access to TCGA cancer datasets.

Designing a Research-Ready Tool with Wolfram Consulting

Drawing on prior experience with the consulting team, Shen-Gunther worked with Wolfram developers to design a custom paclet to streamline data access and clinical research workflows while remaining usable for individuals without extensive programming experience.

Shen-Gunther explained, “Mathematica can handle almost all file types, so this was vital for the success of the project. It also has advanced machine learning functions (predictive modeling), statistical functions that can analyze, visualize, animate and model the data.”

Interface and Data Retrieval — The guided interface (TCGADataToolUserInterface) allows users to select datasets, review available properties and launch key functions without writing code. Built-in routines support batch retrieval of genomic data from the GDC and imaging data from TCIA, including scans and histological slides associated with TCGA studies.

Data Preparation and Modeling — Processing functions like cleanRawData and pullDataSlice prepare structured inputs for analysis by standardizing formats and isolating relevant variables. Modeling tools enable users to identify potential predictors, visualize candidate features, generate design matrices and build models entirely within the Wolfram environment.

Visualization Tools — The paclet includes support for swimmer plots, overall survival plots and progression-free survival plots, helping researchers visualize clinical outcomes and stratify cases by disease progression or treatment response.
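The data preparation step can be pictured with a small sketch. The Python below only illustrates the two ideas named above: standardizing formats, then isolating the relevant variables. `clean_raw_data` and `pull_data_slice` are hypothetical stand-ins; they do not reproduce the signatures or behavior of the paclet's `cleanRawData` and `pullDataSlice`, which are not documented here. The sample records and field names are invented for the example.

```python
# Strings treated as "missing" in this sketch (an assumption, not a TCGA convention).
MISSING_MARKERS = {"", "na", "n/a", "not reported", "--"}


def clean_raw_data(records):
    """Standardize keys to snake_case, map missing markers to None, parse numbers."""
    cleaned = []
    for record in records:
        row = {}
        for key, value in record.items():
            name = key.strip().lower().replace(" ", "_")
            text = str(value).strip()
            if text.lower() in MISSING_MARKERS:
                row[name] = None
                continue
            try:
                row[name] = float(text)
            except ValueError:
                row[name] = text  # keep non-numeric values as trimmed text
        cleaned.append(row)
    return cleaned


def pull_data_slice(rows, columns, require_complete=True):
    """Keep only the requested columns, optionally dropping rows with missing values."""
    sliced = []
    for row in rows:
        subset = {column: row.get(column) for column in columns}
        if require_complete and any(v is None for v in subset.values()):
            continue
        sliced.append(subset)
    return sliced


# Invented records with inconsistent formatting, for illustration only.
raw = [
    {"Case ID": "A-01", "Age at Diagnosis": " 54 ", "Stage": "II", "Days to Death": "NA"},
    {"Case ID": "A-02", "Age at Diagnosis": "61", "Stage": "Not Reported", "Days to Death": "412"},
    {"Case ID": "A-03", "Age at Diagnosis": "47", "Stage": "III", "Days to Death": "980"},
]

rows = clean_raw_data(raw)
model_input = pull_data_slice(rows, ["age_at_diagnosis", "stage"])
print(model_input)
```

Separating cleaning from slicing means the same cleaned table can feed several analyses, each pulling only the columns it needs.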

By combining domain-specific requirements with Wolfram Language’s built-in support for data processing and analysis, the paclet lets researchers without programming experience work directly with TCGA genomic and imaging datasets, using computational methods that would otherwise require advanced technical skills.

Moving from Complexity to Capability

The TCGADataTool was developed in response to specific challenges Shen-Gunther encountered while working with genomic and imaging data. She emphasized the importance of designing a tool that could support clinical research workflows without requiring a background in programming—and saw the result as both accessible and technically robust.

By designing a tool that supports clinical research without requiring fluency in code, Wolfram Consulting Group delivered a solution tailored to the needs of domain experts—extending the reach of high-throughput data into contexts where traditional software workflows often fall short.

“The [Wolfram team] carefully and thoughtfully developed technical solutions at every step and created a beautiful, easily accessible product,” said Shen-Gunther. “Their expertise in data science and user-interface development was essential to the success of the paclet.”

Rather than offering a generalized platform, Wolfram Consulting delivered a focused, researcher-specific application that addresses both technical complexity and day-to-day usability. That model—identifying key obstacles, understanding the research workflow and delivering a targeted solution—can be extended to other biomedical contexts where access to large datasets is essential but often limited by tool complexity.

If your team is facing similar data access or analysis challenges, contact Wolfram Consulting Group for a solution tailored to your research environment.

Preparing for a Future with Generative AI

AI hype has inundated the business world, but let’s be honest: most organizations still aren’t deploying it effectively. Boston Consulting Group reports that nearly 40% of businesses investing in AI are walking away empty-handed. Why? Not because of bad algorithms, but because they’re drowning in data without the tools to make sense of it.

As Wolfram Research CEO Stephen Wolfram recently noted, a large language model (LLM) can produce results that are often “statistically plausible.” Yet, he warns, “it certainly doesn’t mean that all the facts and computations it confidently trots out are necessarily correct.”

Enter Wolfram Consulting Group. We take AI from hype to reality, combining serious computational infrastructure with tools like retrieval-augmented generation (RAG), Wolfram Language–powered analysis and precision data curation. The result? AI that’s an actual business asset—not just another buzzword.

Optimizing Data Pipelines for AI-Driven Insights

Generative AI and LLMs are everywhere, promising to transform customer service, crunch unstructured data and tackle cognitive tasks. But here’s the hard truth: fine-tuning datasets alone doesn’t cut it.

Wolfram Consulting Group takes a smarter approach with tools like RAG, where LLMs dynamically pull trustworthy data, including your proprietary information, on demand. Wolfram doesn’t limit RAG to document-based data: it also draws on sources that compute bespoke answers using models, digital twins and anything else that is computable. It’s an approach Wolfram has pioneered with integrations like Wolfram|Alpha, which lets LLMs execute precise computations through Wolfram Language.
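The retrieval pattern described above, where a model draws on documents and on sources that compute answers, can be sketched in a few lines. This is a toy Python illustration under stated assumptions, not Wolfram's integration: `DOCUMENTS`, `compute`, `retrieve_documents` and `build_context` are hypothetical names, keyword overlap stands in for a real retrieval index, a restricted arithmetic evaluator stands in for a computational engine, and the caller supplies the expression that a model would normally request through a tool call.

```python
import ast
import operator
import re

# Hypothetical in-house documents; a real deployment would use a proper index.
DOCUMENTS = {
    "warranty": "Standard warranty covers parts and labor for 24 months.",
    "shipping": "Orders ship from our regional warehouse within five business days.",
}

_OPERATORS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def compute(expression):
    """Evaluate plain arithmetic exactly; anything else is rejected."""
    def evaluate(node):
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPERATORS:
            return _OPERATORS[type(node.op)](evaluate(node.left), evaluate(node.right))
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -evaluate(node.operand)
        raise ValueError("unsupported expression")
    return evaluate(ast.parse(expression, mode="eval").body)


def _tokens(text):
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve_documents(question, limit=1):
    """Rank documents by how many words they share with the question."""
    words = _tokens(question)
    scored = []
    for name, text in DOCUMENTS.items():
        overlap = len(words & _tokens(text))
        if overlap:
            scored.append((overlap, name, text))
    scored.sort(reverse=True)
    return [f"[doc:{name}] {text}" for _, name, text in scored[:limit]]


def build_context(question, expression=None):
    """Gather grounded facts from both kinds of source before prompting a model."""
    facts = retrieve_documents(question)
    if expression is not None:
        facts.append(f"[computed] {expression} = {compute(expression)}")
    return "\n".join(["Answer using only these facts:", *facts, f"Question: {question}"])


question = "What does the warranty cover, and what do 3 units at 1250 each cost?"
print(build_context(question, "3 * 1250"))
```

The point of the design is that the number in the prompt comes from an evaluator rather than from the model's own guess, and every fact is tagged with where it came from.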

But let’s not pretend this is easy. Juggling multiple data sources can quickly turn into a mess: errors, inefficiencies and results you can’t trust. That’s where Wolfram comes in. By centralizing computational knowledge and leveraging tools like the Wolfram Knowledgebase—packed with verified, real-time external data—we cut through the noise and deliver scalable, accurate AI applications that work.

Leveraging the Wolfram Knowledgebase

No business operates in a vacuum. Relying solely on internal data keeps you stuck in a silo—cut off from the broader context you need to make informed decisions.

The Wolfram Knowledgebase solves that dilemma. It’s not just data—it’s curated, reliable and ready for computation. Spanning everything from economics to physics to cultural trends, it integrates seamlessly with the Wolfram tech stack. Unlike other third-party data sources that leave you wrestling with raw, unstructured information, Wolfram gives you clean, organized datasets you can put to work immediately.

What does this mean for your business? Faster analysis, smarter visualizations and business intelligence you can trust. Whether it’s cross-referencing energy data or uncovering financial trends, Wolfram’s approach transforms mountains of complex data into clear, actionable strategies.

Maximizing AI Impact in Enterprise Environments

Businesses need more than one-size-fits-all solutions. Wolfram Research delivers enterprise-level solutions tailored for organizations that demand results. With tools like Wolfram Enterprise Private Cloud (EPC) and Wolfram|Alpha, we provide the infrastructure and data integration businesses need to scale AI reliably and effectively.

What else sets Wolfram apart? We make existing AI models like GPT-4 and Claude 3 smarter. Wolfram’s flexible, integrated platform works seamlessly in public and private environments, giving businesses control over their data, their analysis and—most importantly—their results.

Bottom line: Wolfram delivers. Whether through cloud infrastructure or curated datasets, we turn generative AI into a scalable, precise business asset. No hype, no hand-waving—just AI that becomes your workhorse.

The Future of AI: Powered by Wolfram

Wolfram Consulting doesn’t chase AI hype—we build tools that make AI results verifiable and production-ready. From risk analysis to workflow automation, we turn generative AI into a real asset with clear scope, fast deployment and measurable results.

With Wolfram at the helm, businesses don’t follow trends—they set them.

Contact Wolfram Consulting Group to learn more about using Wolfram’s tech stack and LLM tools to generate actionable business intelligence.